Large language models (LLMs) are revolutionizing industries, shaping how startups and businesses communicate and process data. However, these advancements also present distinct challenges that businesses must navigate carefully. In a rapidly evolving technological landscape where LLMs are redefining the rules of the game, embracing these innovations can pave the path to success.

Aloa, an expert in software outsourcing, guides startups through the challenges posed by large language models. Their expertise enables businesses to harness the power of LLMs for innovation and efficiency. Aloa offers tailored solutions that streamline the integration of LLMs into diverse applications. With their extensive knowledge, Aloa simplifies complexities, propelling startups and businesses toward efficient, human-like communication and data processing.

This blog explores the fascinating world of large language models. It delves into their types and sheds light on how they understand and generate text that resembles human language. Additionally, we will examine the challenges associated with these models and offer insights on how startups can navigate them successfully.

Let's get started!

What Is a Large Language Model?

A large language model is a groundbreaking artificial intelligence (AI) innovation that has revolutionized how computers understand and generate human language. It is a type of neural network, trained on vast amounts of text, with the versatility to comprehend, analyze, and produce text much as a human would.

In the past, language processing relied heavily on rule-based systems that followed predefined instructions. However, these systems struggled to capture the intricate and nuanced aspects of human language. A significant breakthrough came with the emergence of deep learning and neural networks. In particular, the transformer architecture, exemplified by models like GPT-3 (Generative Pre-trained Transformer 3), brought about a transformative shift.

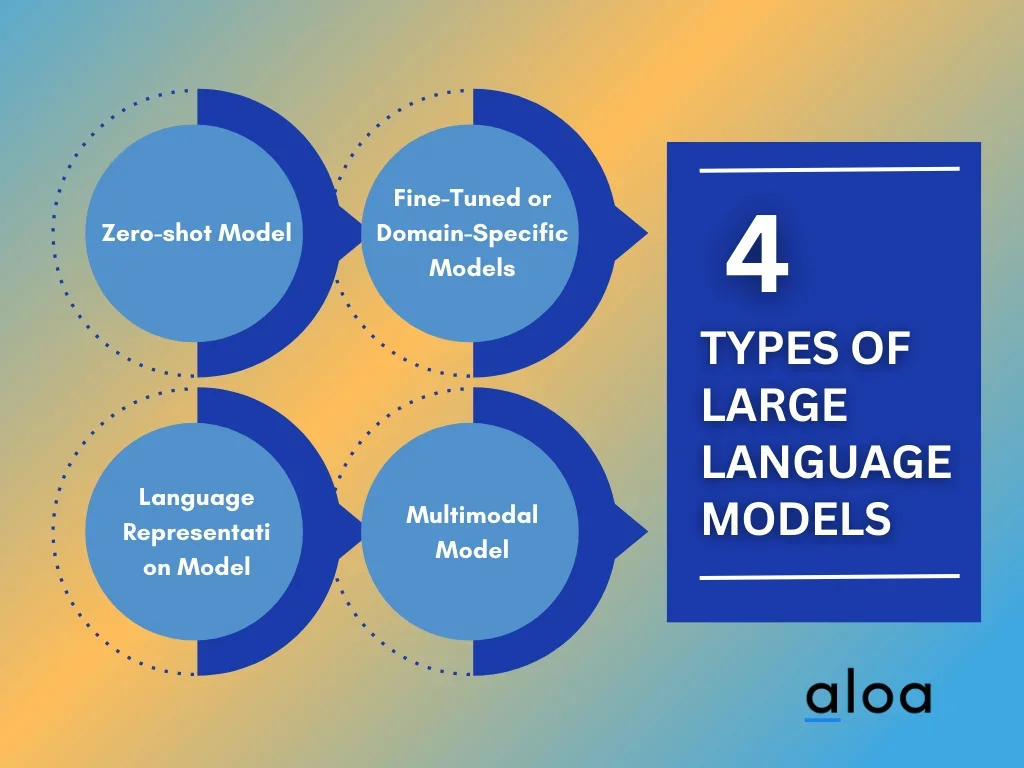

Types of Large Language Models

Let us look at the main categories of large language models that continue to make waves in artificial intelligence.

Zero-shot Model

The zero-shot model is an intriguing development in large language models. It can perform tasks without task-specific fine-tuning, generalizing its understanding to new tasks it was never explicitly trained on. This is made possible by extensive pre-training on vast amounts of data, which allows the model to learn relationships between words, concepts, and contexts.
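To make the idea concrete, here is a minimal sketch of how a zero-shot prompt differs from a few-shot one: the zero-shot version states the task and the input but includes no solved examples, so the model must rely entirely on what it learned during pre-training. The `build_prompt` function and the prompt wording are illustrative assumptions, not any specific vendor's format.

```python
def build_prompt(task, text, examples=None):
    """Build a plain-text prompt.

    With no examples this is a zero-shot prompt; passing (input, output)
    pairs turns it into a few-shot prompt for comparison.
    """
    lines = [f"Task: {task}"]
    for example_input, example_output in examples or []:
        lines.append(f"Input: {example_input}\nOutput: {example_output}")
    lines.append(f"Input: {text}\nOutput:")
    return "\n\n".join(lines)


zero_shot = build_prompt(
    "Classify the sentiment of the review as positive or negative.",
    "The battery died after two days.",
)
print(zero_shot)
```

The model receives only the instruction and the new input; nothing in the prompt shows it a worked answer.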

Fine-Tuned or Domain-Specific Models

Zero-shot models offer wide adaptability, but fine-tuned or domain-specific models take a more targeted approach. These models receive additional training on data from a particular domain or task, refining their understanding so they excel in that area. For example, a large language model can be fine-tuned to analyze medical texts or interpret legal documents. This specialization greatly improves accuracy and efficiency within those specific contexts.

Language Representation Model

Language representation models form the foundation of many large language models. These models learn linguistic subtleties by representing words and phrases as vectors in a multidimensional space. This captures relationships between words, such as synonyms, antonyms, and contextual meanings. Consequently, these models can grasp the layered meaning of a text, enabling them to generate coherent and contextually appropriate responses.
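The idea of representing words as points in a multidimensional space can be illustrated with cosine similarity, a common way to measure how closely two vectors point in the same direction. The three-dimensional vectors below are hand-picked and hypothetical; real models learn vectors with hundreds or thousands of dimensions from data.

```python
import math

# Toy, hand-picked vectors (hypothetical); real embeddings are learned from data.
embeddings = {
    "happy": [0.9, 0.8, 0.1],
    "joyful": [0.85, 0.75, 0.2],
    "invoice": [0.1, 0.2, 0.95],
}


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


print(cosine_similarity(embeddings["happy"], embeddings["joyful"]))
print(cosine_similarity(embeddings["happy"], embeddings["invoice"]))
```

With these vectors, the related pair "happy" and "joyful" scores much higher than "happy" and "invoice".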

Multimodal Model

Technology continues to advance, and with it, the integration of various sensory inputs becomes increasingly essential. Multimodal models go beyond language understanding by incorporating additional forms of data like images and audio. This fusion allows the model to comprehend and generate text while interpreting and responding to visual and auditory cues. The applications of multimodal models span diverse areas, such as image captioning, where the model generates textual descriptions of images, and conversational AI that responds to both text and voice inputs. These models bring us closer to AI systems that can emulate human-like interactions more authentically.

Challenges and Limitations of Large Language Models

Large language models have brought about a revolution in AI and natural language processing. However, despite their significant advancements, these systems, including chatbots like ChatGPT, are not without challenges and limitations. While they have opened up new avenues for communication, they also face obstacles that require careful consideration.

Complexity in Computation and Training Data

One of the primary challenges arises from the intricate nature of large language models. These models have complex neural architectures that require significant computational resources for training and operation. Additionally, gathering the extensive training data needed to fuel these models is daunting. While the internet serves as a valuable source of information, ensuring data quality and relevance remains an ongoing challenge.
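To give a sense of scale, a widely used rule of thumb estimates the training compute of a dense transformer as roughly 6 × N × D floating-point operations (FLOPs), where N is the number of parameters and D the number of training tokens. The sketch below applies that approximation to a GPT-3-scale model. It ignores attention overhead and real hardware efficiency, and the per-GPU throughput is a hypothetical figure, so treat the results as order-of-magnitude estimates only.

```python
def training_flops(num_params, num_tokens):
    """Approximate dense-transformer training compute: about 6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens


def gpu_days(total_flops, sustained_flops_per_gpu):
    """Convert total FLOPs into single-GPU days at an assumed sustained throughput."""
    seconds = total_flops / sustained_flops_per_gpu
    return seconds / 86_400


# GPT-3-scale example: about 175 billion parameters and 300 billion training tokens.
flops = training_flops(175e9, 300e9)
print(f"{flops:.2e} FLOPs")  # prints 3.15e+23 FLOPs

# Hypothetical throughput of 100 teraFLOP/s actually achieved per GPU.
print(f"{gpu_days(flops, 100e12):,.0f} GPU-days")  # prints 36,458 GPU-days
```

Even under these generous assumptions, a single GPU would need about a century, which is why such models are trained on large clusters.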

Bias and Ethical Concerns

Large language models are susceptible to biases found in their training data. These biases, often unintentional, persist in the content the models learn from and can degrade response quality or produce undesirable outcomes. Such biases can reinforce stereotypes and spread misinformation, raising ethical concerns. This underscores the need for meticulous evaluation and fine-tuning of these models.

Lack of Understanding and Creativity

Despite their impressive capabilities, large language models struggle with genuine understanding and creativity. These models generate responses from patterns learned in their training data, which can produce answers that sound plausible but are factually incorrect. This limitation affects their ability to engage in nuanced discussions, provide original insights, or fully grasp contextual subtleties.

Need for Human Feedback and Model Interpretability

Human feedback plays a pivotal role in improving large language models. Although these models can generate text independently, human guidance is crucial for keeping their responses coherent and accurate. Interpretability, understanding how a model reaches a specific answer, is also essential for building trust and identifying potential errors.
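One common way to turn human feedback into a training signal, as in reinforcement learning from human feedback (RLHF), is to have people pick the better of two responses and train a reward model so the preferred response scores higher. The sketch below shows the pairwise (Bradley-Terry style) loss for a single comparison. The reward scores are placeholder numbers, not outputs of a real model.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Pairwise loss: -log(sigmoid(r_chosen - r_rejected)).

    Small when the human-preferred response already scores higher,
    large when the reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # log1p(exp(-margin)) is algebraically equal to -log(sigmoid(margin)).
    return math.log1p(math.exp(-margin))


print(preference_loss(2.0, 0.5))  # reward model agrees with the human ranking
print(preference_loss(0.5, 2.0))  # reward model disagrees, so the loss is larger
```

Minimizing this loss over many human comparisons pushes the reward model to agree with people's preferences, and that reward model can then guide further training of the language model.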

Features of Large Language Models

Large language models can comprehend and generate text that closely resembles human expression. To fully grasp their significance, let us explore the features that characterize these models and make them vital assets in modern language processing.

Natural Language Understanding

Natural language understanding is one of the cornerstones of large language models, and it rests on two key capabilities.

- Contextual Word Representations: To grasp the nuanced meanings of words, a large language model takes into account the context in which they appear. Unlike traditional methods that treat words in isolation, these models interpret each word in light of the words around it. This approach yields more accurate interpretations and a deeper understanding of language.

- Semantic Understanding: These models can understand the meaning of sentences and paragraphs, allowing them to grasp the underlying concepts and extract relevant information. This understanding enables more advanced and contextually appropriate interactions.
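In transformer models, contextual word representations come from self-attention: each word's new vector is a weighted average of the vectors of the words around it, with weights based on how similar they are. The sketch below implements scaled dot-product attention in plain Python on tiny, made-up two-dimensional vectors. For simplicity it uses the input vectors directly as queries, keys, and values, whereas a real model first applies learned projections.

```python
import math


def softmax(scores):
    """Convert raw scores into weights that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Scaled dot-product self-attention with Q = K = V = the input vectors."""
    d = len(vectors[0])
    outputs = []
    for query in vectors:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in vectors]
        weights = softmax(scores)
        # Weighted sum of value vectors: the word's new, context-aware vector.
        outputs.append([sum(w * value[i] for w, value in zip(weights, vectors)) for i in range(d)])
    return outputs


# Hypothetical 2-D vectors: the same input vector for "bank" in two different sentences.
bank = [1.0, 1.0]
river, water = [2.0, 0.0], [1.8, 0.2]
money, loan = [0.0, 2.0], [0.2, 1.8]

bank_near_river = self_attention([river, water, bank])[2]
bank_near_money = self_attention([money, loan, bank])[2]
print(bank_near_river)
print(bank_near_money)
```

Although "bank" starts with an identical vector in both sentences, its output vector leans toward the "river" direction in one context and the "money" direction in the other.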

Text Generation Capabilities

Large language models are extremely proficient at producing text that is both coherent and contextually relevant. This capability has led to numerous applications across a wide range of uses.

- Creative Writing: Language models can display artistic range across various domains, crafting gripping narratives, penning poetry, and even composing song lyrics.

- Code Generation: These models have demonstrated coding abilities by generating code snippets from textual descriptions. This capability benefits developers by accelerating parts of the software development process.

- Conversational Agents: Advanced chatbots and virtual assistants are built on large language models. These systems can engage in human-like conversations, provide customer support, answer inquiries, and assist users across various industries.
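Under the hood, generating text means repeatedly choosing the next token from a probability distribution the model produces. The sketch below shows one common step, temperature-scaled sampling, on a hypothetical set of candidate tokens and scores (logits). A real model would compute these scores from the preceding text, and the three-word vocabulary here is invented for illustration.

```python
import math
import random


def sample_next_token(logits, temperature=1.0, rng=random):
    """Sample a token after scaling logits by temperature.

    Lower temperatures sharpen the distribution (more predictable text);
    higher temperatures flatten it (more varied text).
    """
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    tokens = list(logits)
    scaled = [logits[t] / temperature for t in tokens]
    m = max(scaled)
    # Unnormalized softmax weights; random.choices normalizes them itself.
    weights = [math.exp(s - m) for s in scaled]
    return rng.choices(tokens, weights=weights, k=1)[0]


# Hypothetical scores for the word after "The cat sat on the".
logits = {"mat": 3.0, "sofa": 2.0, "moon": 0.5}
rng = random.Random(0)
print([sample_next_token(logits, temperature=0.2, rng=rng) for _ in range(5)])
```

At a low temperature such as 0.2, "mat" dominates; raising the temperature lets "sofa" and occasionally "moon" appear, trading predictability for variety.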

Multilingual and Cross-Domain Competence

Large language models can help overcome language barriers and adapt to different domains. This leads to significant advancements in several areas, as discussed below:

- Breaking Language Barriers: These models can provide real-time translation, making information accessible to a global audience in their native languages. As a result, they foster effective collaboration and smoother interactions across borders.

- Adapting to Different Domains: These models can adapt quickly to various subject matters. From medical information to legal documents, they can generate domain-specific content, making them versatile and broadly applicable across diverse industries.

Uses of Large Language Models

Large language models have gained prominence as transformative tools with a wide range of applications. These models harness machine learning and natural language processing to comprehend and generate text that closely resembles human expression. Let us explore how they are transforming text-based tasks and interactions.

Text Generation and Completion

Large language models have ushered in a new era of text generation and completion. These models capture context, meaning, and the subtle intricacies of language. As a result, they can produce coherent and contextually relevant text, a capability that has found practical applications across various domains.

- Writing Assistance: Professional and amateur writers alike benefit from large language models. These models can suggest appropriate phrases, sentences, or even whole paragraphs, simplifying the creative process and improving the quality of written content.

- Content Creation: Language models help creators generate engaging and informative text. Having learned from vast amounts of data, these models can tailor content to specific target audiences.

Question Answering and Information Retrieval

Large language models are rapidly advancing question answering and information retrieval. Their ability to understand human language allows them to extract pertinent details from vast data repositories.

- Virtual Assistants: Virtual assistants powered by large language models offer a convenient way for users to find accurate and relevant information. These AI systems can help with various tasks, such as checking the weather, finding recipes, or answering complex questions. By understanding context and generating appropriate responses, they enable smooth human-AI interactions.

- Search Engines: Search engines are the foundation of digital exploration, relying on their ability to interpret user queries and deliver relevant results. Large language models further improve these platforms by helping them understand queries better and return more precise, personalized results.
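Retrieval systems built around language models typically turn the query and each document into vectors and return the closest matches. The sketch below uses simple bag-of-words counts with cosine similarity as a self-contained stand-in for learned embeddings. The documents and the `search` helper are hypothetical.

```python
import math
import re
from collections import Counter


def bag_of_words(text):
    """Lowercase word counts; a crude stand-in for a learned embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def search(query, documents, top_k=2):
    """Return the top_k documents ranked by similarity to the query."""
    q = bag_of_words(query)
    scored = [(cosine(q, bag_of_words(doc)), doc) for doc in documents]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


docs = [
    "Tomorrow's weather forecast calls for rain in Seattle.",
    "A quick recipe for tomato soup with basil.",
    "How to reset your account password.",
]
print(search("what is the weather forecast for tomorrow", docs, top_k=1))
```

Swapping `bag_of_words` for embeddings from a language model keeps the same ranking logic while matching on meaning rather than exact words.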

Sentiment Analysis and Opinion Mining

Understanding human sentiment and opinions matters in many contexts, from shaping brand perception to conducting market analysis. Large language models provide powerful tools for analyzing sentiment in textual data.

- Social Media Monitoring: Businesses and organizations can use large language models to analyze and monitor the sentiment expressed on social platforms. This enables them to assess public opinion, track brand sentiment across social media feeds, and make well-informed decisions.

- Brand Perception Analysis: Large language models assess brand sentiment by analyzing customer reviews, comments, and feedback. This analysis helps companies refine their products, services, and marketing strategies based on public perception.
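In practice, brand sentiment monitoring often comes down to classifying each review or post and then aggregating the labels. The sketch below shows that aggregation step with a pluggable `classify` function. The keyword-based `placeholder_classify` is only a hypothetical stand-in for a call to a large language model.

```python
from collections import Counter


def placeholder_classify(text):
    """Hypothetical stand-in for an LLM call that returns a sentiment label."""
    lowered = text.lower()
    if any(word in lowered for word in ("love", "great", "excellent")):
        return "positive"
    if any(word in lowered for word in ("broken", "terrible", "refund")):
        return "negative"
    return "neutral"


def sentiment_summary(texts, classify=placeholder_classify):
    """Count labels and compute a net score: (positive - negative) / total."""
    counts = Counter(classify(t) for t in texts)
    total = sum(counts.values())
    net = (counts["positive"] - counts["negative"]) / total if total else 0.0
    return dict(counts), net


reviews = [
    "I love the new update, great job!",
    "Arrived broken, I want a refund.",
    "Delivery took four days.",
    "Excellent customer support.",
]
print(sentiment_summary(reviews))
```

Keeping the classifier pluggable means the aggregation and reporting stay the same when the placeholder is replaced with a real model call.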

How To Implement a Large Language Model In Your Process

Integrating a large language model into your processes opens up many possibilities. Because these AI systems can comprehend and generate text that closely resembles human writing, their potential spans diverse domains, making them valuable tools for boosting productivity and innovation. In this guide, we provide step-by-step instructions for incorporating a large language model into your workflow and harnessing its capabilities.

Step 1: Determine Your Use Case

To implement a large language model successfully, first identify your specific use case. This step clarifies your requirements and guides both the choice of model and the tuning of its parameters for optimal results. Typical applications of LLMs include machine translation, chatbots, natural language inference, computational linguistics, and more. Exploring how to build your own custom LLM also lets developers tailor solutions to their specific needs, enabling greater customization and efficiency in AI-driven tasks.

Step 2: Choose the Right Model

Various large language models are available. Popular choices include GPT by OpenAI, BERT (Bidirectional Encoder Representations from Transformers) by Google, and other Transformer-based models. Each model has unique strengths and suits particular tasks. Both GPT and BERT are themselves built on the Transformer architecture, whose self-attention mechanism proves valuable for capturing contextual information within text.

Step 3: Access the Model

Once you have selected a model, the next step is to access it. Many LLMs are available as open-source options on platforms like GitHub. For instance, OpenAI's models are accessed through their API, while Google's BERT model can be downloaded from its official repository. If the model you want is not open-source, you may need to contact the provider or obtain a license.
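OpenAI publishes official client libraries, but to stay self-contained the sketch below calls the Chat Completions HTTP endpoint using only Python's standard library. It assumes an API key stored in the `OPENAI_API_KEY` environment variable, and the default model name is an assumption; check the provider's current documentation for available models and request fields.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"


def build_request(prompt, model="gpt-4o-mini", api_key=None):
    """Build (but do not send) a Chat Completions HTTP request."""
    api_key = api_key or os.environ.get("OPENAI_API_KEY", "")
    body = {
        "model": model,  # assumption: use a model your account can access
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(build_request(prompt, **kwargs), timeout=30) as response:
        payload = json.load(response)
    return payload["choices"][0]["message"]["content"]


# Requires network access and a valid OPENAI_API_KEY:
# print(ask("Summarize what a large language model is in one sentence."))
```

Separating request construction from sending makes the request easy to inspect and test without network access.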

Step 4: Preprocess Your Data

Before using the large language model, prepare your data. This involves removing irrelevant information, correcting errors, and transforming the data into a format the model can readily process. These steps matter because input quality strongly influences the model's performance.
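A minimal preprocessing pass might strip leftover HTML, normalize whitespace, drop fragments too short to be useful, and remove exact duplicates before writing one JSON record per line (JSONL), a format many training and fine-tuning tools accept. The `min_words` threshold and the `text` field name below are assumptions to adapt to your own pipeline.

```python
import html
import json
import re


def clean_text(raw):
    """Remove HTML tags and entities, then collapse runs of whitespace."""
    no_tags = re.sub(r"<[^>]+>", " ", raw)
    unescaped = html.unescape(no_tags)
    return re.sub(r"\s+", " ", unescaped).strip()


def preprocess(records, min_words=3):
    """Clean records, skip very short ones, and drop exact duplicates."""
    seen = set()
    cleaned = []
    for raw in records:
        text = clean_text(raw)
        if len(text.split()) < min_words or text in seen:
            continue
        seen.add(text)
        cleaned.append(text)
    return cleaned


def to_jsonl(texts):
    """Serialize each text as one JSON object per line."""
    return "\n".join(json.dumps({"text": t}) for t in texts)


raw_records = [
    "<p>Our return policy lasts&nbsp;30 days.</p>",
    "Our return policy   lasts 30 days.",
    "OK",
    "Contact support for warranty claims.",
]
print(to_jsonl(preprocess(raw_records)))
```

Here the second record becomes a duplicate of the first once cleaned, and "OK" is too short, so only two records survive.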

Step 5: Fine-tune the Model

Once your data is prepared, you can begin fine-tuning the large language model. This step optimizes the model's parameters for your use case. Although it can be time-consuming, it is essential for achieving optimal results, and it may require experimenting with different settings and training datasets to find the ideal configuration.
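The experimentation step is often organized as a small search over training settings, keeping whichever configuration scores best on held-out validation data. In the sketch below, `fake_train_and_evaluate` is a hypothetical placeholder that returns made-up scores; in a real project it would launch a fine-tuning run with the given settings and return a validation metric.

```python
import itertools


def grid_search(train_and_evaluate, grid):
    """Try every combination in `grid` and return the best (score, settings)."""
    names = list(grid)
    best_score, best_settings = float("-inf"), None
    for values in itertools.product(*(grid[name] for name in names)):
        settings = dict(zip(names, values))
        score = train_and_evaluate(**settings)  # higher is better
        if score > best_score:
            best_score, best_settings = score, settings
    return best_score, best_settings


def fake_train_and_evaluate(learning_rate, epochs):
    """Hypothetical stand-in returning a made-up validation score."""
    return -abs(learning_rate - 2e-5) * 1e4 - abs(epochs - 3) * 0.1


grid = {"learning_rate": [1e-5, 2e-5, 5e-5], "epochs": [1, 3, 5]}
print(grid_search(fake_train_and_evaluate, grid))
```

Because each real fine-tuning run can be expensive, grids are usually kept small or replaced with random search over the same settings.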

Step 6: Implement the Model

After fine-tuning the model, you can integrate it into your process. This can involve embedding the large language model into your software or setting it up as a standalone service that your systems can query. Ensure the model is compatible with your infrastructure and can handle the required workload.
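A minimal way to set the model up as a standalone service is a small HTTP endpoint that accepts a prompt and returns the model's output as JSON. The sketch below uses only Python's standard library. The `/generate` path, the JSON field names, and the `generate` function (an echo placeholder for your real inference call) are assumptions, and a production service would add authentication, batching, and a proper web framework or model server.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


def generate(prompt):
    """Hypothetical placeholder for the fine-tuned model's inference call."""
    return f"Echo: {prompt}"


class CompletionHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/generate":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            prompt = json.loads(self.rfile.read(length))["prompt"]
        except (ValueError, KeyError, TypeError):
            self.send_error(400, "expected JSON body with a 'prompt' field")
            return
        body = json.dumps({"completion": generate(prompt)}).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host="127.0.0.1", port=8000):
    """Run the service until interrupted; port 8000 is an arbitrary choice."""
    ThreadingHTTPServer((host, port), CompletionHandler).serve_forever()


# To start it locally: serve()
```

Other systems can then send a POST request with a body such as `{"prompt": "..."}` to `/generate` and read the `completion` field from the JSON response.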

Step 7: Monitor and Update the Model

Once the large language model is implemented, monitor its performance and update it as needed. As new data becomes available, a model's knowledge can become outdated, so regular updates are essential for maintaining performance. You may also need to adjust the model's parameters as your requirements evolve.
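Monitoring can start as simply as recording whether each response passed a check (a user's thumbs-up, a reviewer's verdict, or an automated test) and watching a rolling success rate. The `QualityMonitor` class below is a hypothetical sketch; the window size, the threshold, and what counts as a success are assumptions you would set for your own use case.

```python
from collections import deque


class QualityMonitor:
    """Track a rolling success rate and flag when it drops below a threshold."""

    def __init__(self, window=100, threshold=0.9):
        self.results = deque(maxlen=window)  # keeps only the most recent outcomes
        self.threshold = threshold

    def record(self, success):
        self.results.append(bool(success))

    @property
    def success_rate(self):
        return sum(self.results) / len(self.results) if self.results else 1.0

    def needs_attention(self):
        """True once the window is full and the rate falls below the threshold."""
        return len(self.results) == self.results.maxlen and self.success_rate < self.threshold


monitor = QualityMonitor(window=5, threshold=0.8)
for outcome in [True, True, False, True, False]:
    monitor.record(outcome)
print(monitor.success_rate, monitor.needs_attention())  # prints 0.6 True
```

Waiting for a full window avoids raising an alert on the first few noisy results, and a triggered alert is a natural prompt to review recent data or schedule another round of fine-tuning.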

Key Takeaway

In modern AI, the large language model stands out as an extraordinary example of what neural networks and natural language processing can achieve. Its remarkable aptitude for comprehending and generating human-like text holds immense potential across many industries.

Businesses and startups are tapping into the potential of these models, creating a wave of innovation and efficiency across industries. From automated content creation to improved customer interactions and insights drawn from textual data, large language models are poised to reshape how we use AI. Don't fall behind in the ever-evolving tech landscape: embrace this marvel of AI and explore its versatile applications.

If you need further insights on implementing and using a large language model, feel free to reach out to [email protected]. Our team of experts is here to help you navigate the world of large language models and harness their power effectively.