Generative AI adoption has moved past experimentation. 78% of companies already use it in at least one function. But for mid-market firms, the story isn’t simple: 92% say they’ve hit roadblocks along the way. Add in team concerns about job changes, skill gaps, or just keeping up, and you’re still on the hook to show ROI without disrupting daily work.

At Aloa, we work closely with mid-market companies facing that same mix of urgency and caution. What we’ve learned is that the leaders who succeed take a measured approach. They set clear goals, run focused pilots, and integrate tools securely, all while preparing their teams to grow alongside the technology.

This guide is designed as your step-by-step playbook. We’ll look at how to assess readiness, design a phased roadmap, put governance in place, train people, and track ROI. By the end, you’ll have a clear path for adoption that’s practical, safe, and sustainable.

Assessing Organizational Readiness

Generative AI adoption often starts in content-heavy areas. Marketing teams use it to generate emails and product copy, service teams use it to handle routine requests, and product groups use it to draft specs or documentation. With good prompts and clean data, output speeds up without losing consistency. These early use cases also give you a baseline for tracking how generative AI is used inside your company.

But interest doesn’t equal readiness. Many companies discover they need at least a year to iron out data access, governance, and training before scaling. To save yourself frustration, we recommend asking three simple questions: is our infrastructure ready, are our people ready, and is our culture ready?

Technical Infrastructure Assessment

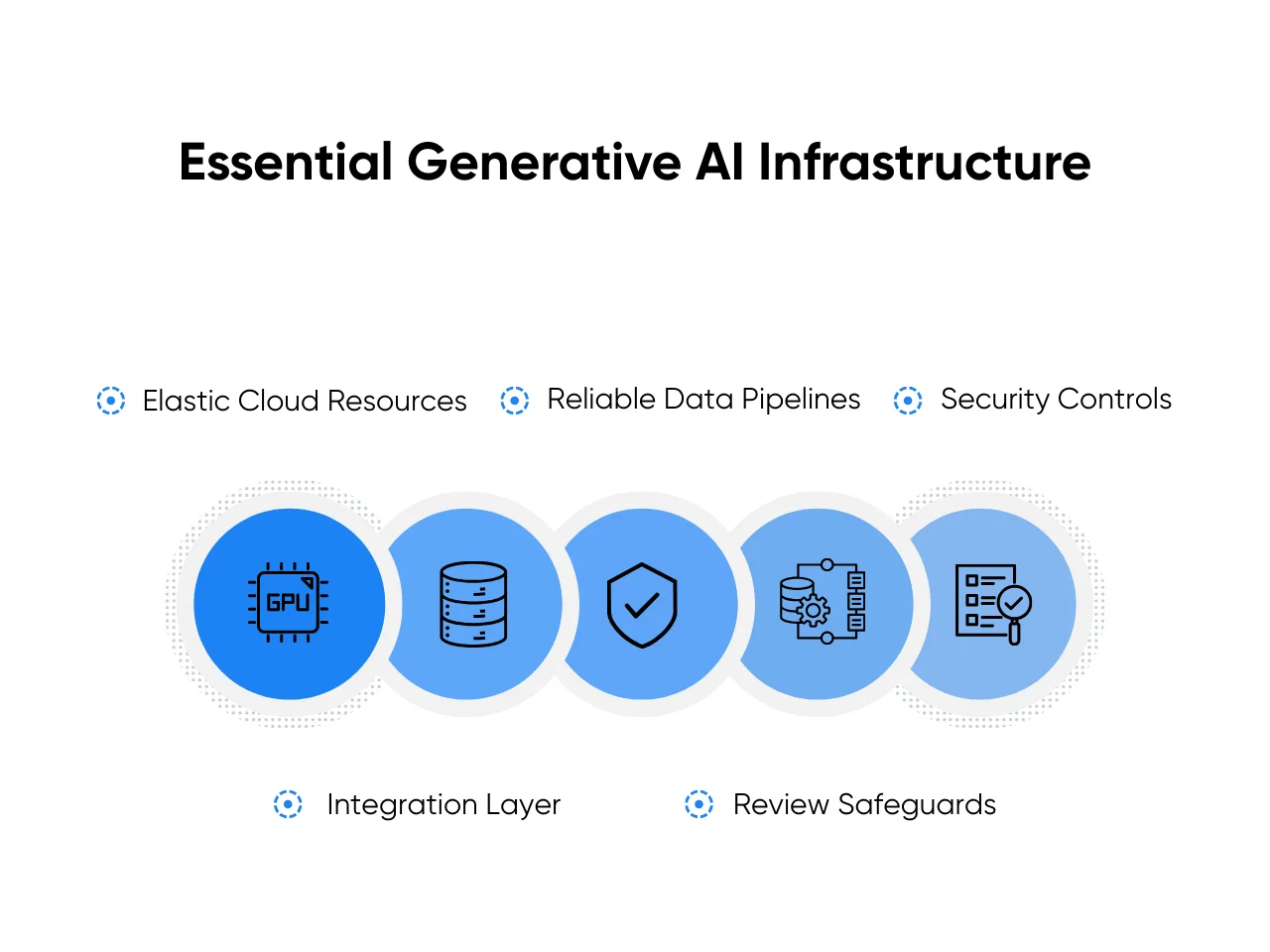

Think of your infrastructure like the plumbing in your house. You don’t notice it until there’s a leak. For generative AI, the “leaks” are usually integration bottlenecks or security gaps. Before moving forward, make sure you have:

- Elastic Cloud Resources: Enough CPU/GPU headroom to support model workloads.

- Reliable Data Pipelines: Clean, labeled, compliant streams your models can trust.

- Security Controls: Single sign-on, role-based access, and encryption baked in.

- Integration Layer: API gateways and monitoring so your systems talk to each other without slowing down.

- Review Safeguards: Human oversight for sensitive or high-risk outputs.

Rather than betting big, we suggest running a small pilot to stress-test these basics. Many leaders have found their first pilot exposed overlooked gaps: integration limits, missing audit logs, or tight cloud quotas.

The key is catching those gaps before scale makes them expensive. At Aloa, we help teams establish the right guardrails upfront and guide you through the technical details.

Skills Gap Analysis

Bain reports that the average mid-sized firm now has about 160 employees using generative AI tools daily, a 30% jump in one year. To see where you stand, rate your team on a simple 1–5 scale:

- Prompt Design: Can your team write and refine prompts that get reliable outputs?

- Data Literacy: Do they know how to prepare and interpret data without bias?

- Evaluation: Can they measure accuracy, run tests, and monitor drift?

- Governance: Do they understand privacy, IP, and compliance basics?

- Change Leadership: Are managers able to guide teams through new workflows?

Anyone scoring a 1–2 should get immediate training, 3–4s can join pilots, and 5s are ready to mentor. Companies that run this type of rating exercise and pair rollouts with quick learning modules tend to see adoption feel less like a disruption and more like a natural extension of daily work.
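
The rating exercise above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed tool: the skill keys, the `triage` and `team_summary` helpers, and the sample ratings are all hypothetical, and it assumes the 1–5 rule is applied per skill rather than to an overall score.

```python
from statistics import mean

# Skill areas from the list above; the key names are our own shorthand.
SKILLS = ["prompt_design", "data_literacy", "evaluation", "governance", "change_leadership"]


def triage(score: int) -> str:
    """Map a 1-5 rating to the next step described in the text."""
    if not 1 <= score <= 5:
        raise ValueError(f"score must be between 1 and 5, got {score}")
    if score <= 2:
        return "train now"
    if score <= 4:
        return "join pilot"
    return "mentor"


def team_summary(ratings: dict[str, dict[str, int]]) -> dict[str, float]:
    """Average each skill across the team to show where the gaps are."""
    return {skill: mean(person[skill] for person in ratings.values()) for skill in SKILLS}


# Illustrative ratings only, not real data.
ratings = {
    "analyst": {"prompt_design": 2, "data_literacy": 4, "evaluation": 3,
                "governance": 1, "change_leadership": 2},
    "manager": {"prompt_design": 3, "data_literacy": 3, "evaluation": 2,
                "governance": 4, "change_leadership": 5},
}

for person, scores in ratings.items():
    for skill, score in scores.items():
        print(f"{person:8} {skill:18} {score} -> {triage(score)}")

print(team_summary(ratings))
```

The per-skill averages point to where a quick learning module would help most, while the per-person triage shows who can join a pilot right away.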

Cultural Readiness Evaluation

Even with the right tools and skills, we suggest looking for three signals to gauge your team’s readiness for transformation:

- Trust and Transparency: Do your people know what AI will and won’t do, and how outputs are reviewed?

- Participation: Are you involving frontline teams in pilots and sharing results openly?

- Guardrails: Have you made it clear what data and vendors are safe to use?

Often, most executives believe the transformation will go smoothly, while fewer than half of employees agree. That disconnect can slow everything down during implementation. It takes frequent communication for teams to feel equipped rather than replaced.

For example, Klarna introduced an AI assistant for routine inquiries, while training agents to handle more complex cases. By framing the tool as a partner, not a replacement, they cut response times while employee satisfaction actually improved. That balance (clear scope plus skills investment) helps adoption stick.

Building Your Implementation Roadmap

You’ve checked readiness. Great. But the teams that succeed don’t launch AI everywhere on day one. They find proof that it’s working and build momentum naturally. Here’s how we’d break down an AI launch roadmap:

Phase-Based Implementation Approach

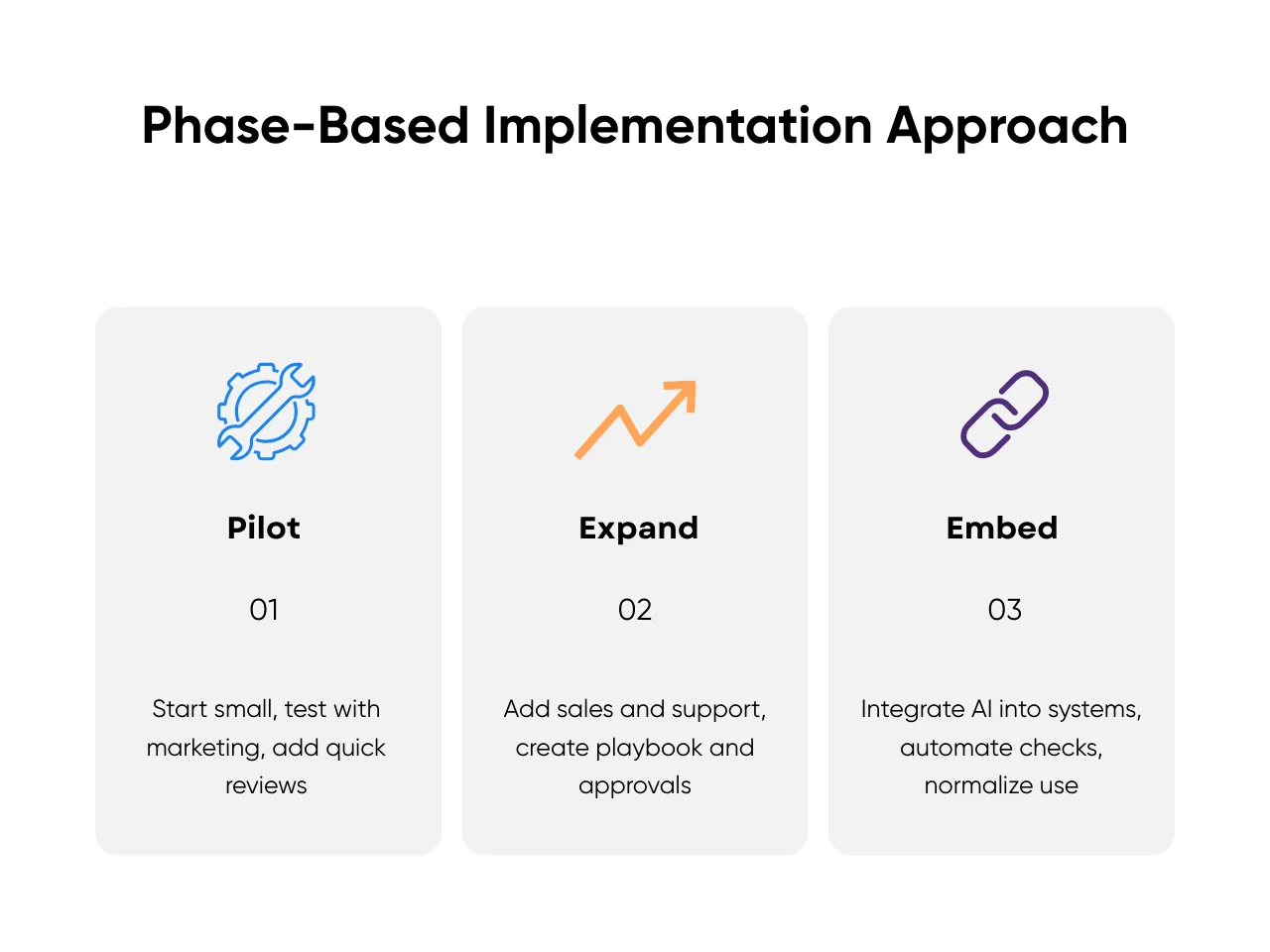

Think in three moves:

- Pilot: Start with one workflow and a small group. A popular first step is marketing content, like product descriptions or campaign copy. Train for brand voice, add a quick review queue, and compare outputs to your current process. This keeps risk low and makes wins visible.

- Expand: When the pilot holds, add two or three nearby workflows. Sales might use AI to summarize call notes. Customer care can draft replies to high-volume tickets. Create a simple playbook: prompt library, red flags to watch, and who signs off.

- Embed: Fold AI into core systems. Connect to your knowledge base, analytics stack, and identity access controls. Turn manual checks into routine audits. At this point, generative AI stops being “the tool” and becomes part of how work gets done.

Resource Allocation Guidelines

Here’s how you can distribute your costs:

- Pilot Costs: Cover data prep, integrations, security review, and a starter training block. Name owners for prompt quality, model settings, and release gates.

- Scale-Up Fund: Reserve budget to move the next two or three workflows once the pilot proves out. Line items often include change management, extra connectors, and expanded access.

- Ongoing Operations: Hold a consistent slice for training refreshers, monitoring, and model updates. Treat it like maintenance. Without tune-ups, quality drifts and confidence drops.

At Aloa, we design proof-of-concept pilots, map the next moves, and budget for ongoing care so generative AI projects scale instead of stalling.

Progress Measurement Frameworks

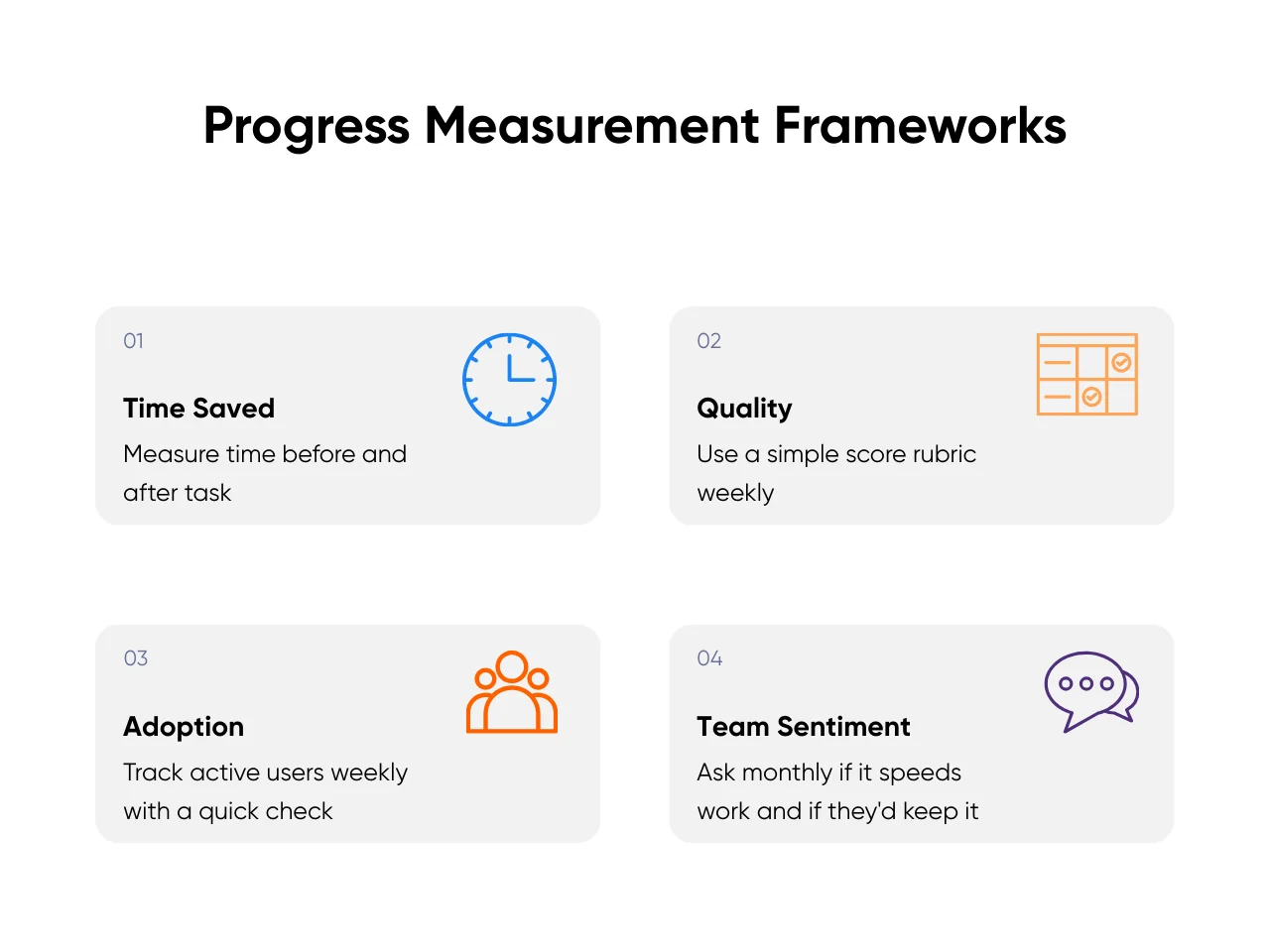

Set your baseline, then track four signals from day one:

- Time Saved: Pick a task and run a time-and-motion check before and after.

- Quality: Create a simple rubric. Example criteria: accuracy, tone, compliance notes. Score a sample each week.

- Adoption: Monitor weekly active users, not just licenses. Add a quick “still using this?” pulse in your tool.

- Team Sentiment: Ask two questions monthly: “Did this speed you up?” and “Would you keep it?” Comments matter more than charts.

Make the results easy to share. One slide for quick reads and a full report when stakeholders want details. Deloitte’s work on AI KPIs echoes this point: clear, agreed metrics are what unlock ongoing support.
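
Two of the signals above, time saved and adoption, are simple to compute from data most teams already have. The sketch below is one possible approach, assuming you can export task timings and a usage log of `(user_id, day)` events. The function names and sample values are hypothetical.

```python
from datetime import date


def pct_time_saved(baseline_minutes: float, current_minutes: float) -> float:
    """Percent reduction in task time versus the pre-launch baseline."""
    if baseline_minutes <= 0:
        raise ValueError("baseline_minutes must be positive")
    return round(100 * (baseline_minutes - current_minutes) / baseline_minutes, 1)


def weekly_active_users(events: list[tuple[str, date]]) -> dict[tuple[int, int], int]:
    """Count distinct users per ISO (year, week) from (user_id, day) usage events."""
    users_by_week: dict[tuple[int, int], set[str]] = {}
    for user_id, day in events:
        iso = day.isocalendar()  # (ISO year, ISO week, ISO weekday)
        users_by_week.setdefault((iso[0], iso[1]), set()).add(user_id)
    return {week: len(users) for week, users in users_by_week.items()}


# Illustrative data only.
events = [
    ("ana", date(2025, 1, 6)),
    ("ana", date(2025, 1, 7)),   # same user, same week: counted once
    ("ben", date(2025, 1, 8)),
    ("ana", date(2025, 1, 13)),  # following week
]

print(pct_time_saved(baseline_minutes=40, current_minutes=28))
print(weekly_active_users(events))
```

Counting distinct users per week, rather than total sessions or licenses, keeps the adoption number honest when a few heavy users account for most of the activity.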

Risk Management and Governance

AI projects run smoother when risks are managed early. That means giving people clear ownership, setting responsibilities, and keeping regular check-ins. Governance adds simple rules and decision-makers to catch issues early and keep generative AI adoption on track.

Risk Assessment Methodology

Every AI use case is different, so it’s important to analyze the risk before you ship:

- Start with the obvious buckets: Inaccuracy, data leakage, cybersecurity, intellectual property misuse, bias, and “jailbreak” prompts. These are the recurring themes almost every team runs into.

- Rate each workflow: Low, medium, or high risk. A draft tool that suggests marketing copy? Probably low. A claims-processing model handling personal data? Definitely high.

- Build guardrails that match the tier:

  - Low: Human review and logging are usually enough.

  - Medium: Add PII redaction, access controls, and clear escalation rules.

  - High: Add sandbox testing, encryption, incident response playbooks, and legal sign-off.

- Stress-test early: Use red-team prompts, run A/B comparisons, and document where the system breaks. Don’t wait for a customer to discover the flaws.

- Set stop rules: Define clear thresholds (like error rates or unusual latency) that trigger an automatic pause or escalation to human review.

Think of it as a traffic-light system: green to go, yellow to proceed carefully, red to halt until fixes land. Simple enough for everyone to follow, but strong enough to keep you out of headlines.
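
To make the tiering and stop rules concrete, here is a minimal Python sketch. It assumes the guardrails are cumulative (each tier adds to the one below it), which is our reading of “add” in the list above. The `StopRule` class and its threshold values are hypothetical placeholders that you would replace with your own limits.

```python
from dataclasses import dataclass

TIER_ORDER = ["low", "medium", "high"]

# Guardrails per tier, taken from the list above.
GUARDRAILS = {
    "low": ["human review", "logging"],
    "medium": ["PII redaction", "access controls", "escalation rules"],
    "high": ["sandbox testing", "encryption", "incident response playbook", "legal sign-off"],
}


def required_guardrails(tier: str) -> list[str]:
    """Return the guardrails for a tier plus everything from the tiers below it."""
    if tier not in TIER_ORDER:
        raise ValueError(f"unknown tier: {tier!r}")
    controls: list[str] = []
    for name in TIER_ORDER[: TIER_ORDER.index(tier) + 1]:
        controls.extend(GUARDRAILS[name])
    return controls


@dataclass(frozen=True)
class StopRule:
    """Thresholds that trigger a pause. The numbers used below are illustrative."""
    max_error_rate: float
    max_p95_latency_ms: float

    def should_pause(self, error_rate: float, p95_latency_ms: float) -> bool:
        """True when either metric is over its limit."""
        return error_rate > self.max_error_rate or p95_latency_ms > self.max_p95_latency_ms


rule = StopRule(max_error_rate=0.05, max_p95_latency_ms=2000)
print(required_guardrails("medium"))
print(rule.should_pause(error_rate=0.02, p95_latency_ms=900))  # within limits
print(rule.should_pause(error_rate=0.08, p95_latency_ms=900))  # error rate over the limit
```

Writing the thresholds down as data, rather than leaving them in someone’s head, is what makes the pause automatic instead of a judgment call made under pressure.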

Governance Structure Recommendations

Strong governance doesn’t mean extra layers of bureaucracy. It means clear roles and one place where decisions get made:

- Executive Sponsor: Accountable for AI outcomes and budget. Without an exec on the hook, things drift.

- AI Product Owner: Runs the roadmap, sets the quality bar, and manages delivery.

- Security and Compliance Lead: Validates data use, vendors, and policies.

- Data Steward: Owns datasets, lineage, and retention rules.

- Engineering Lead: Manages integrations, performance, and costs.

- Business Lead: Defines success metrics and signs off on outcomes.

Stand up two lightweight assets:

- AI Council: Five to seven people, short cadence, approves use cases, reviews incidents, decides go or no-go.

- Model Registry: One place to track models, prompts, datasets, versions, owners, and change history.
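
A model registry does not have to start as a product purchase. As a rough sketch, assuming an in-memory store is enough for a first pilot, it can be a small data structure like the one below. The `RegistryEntry` fields mirror the items listed above; the class names, field names, and sample values are hypothetical.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class RegistryEntry:
    name: str
    version: str
    owner: str
    prompt_id: str
    datasets: list[str]
    history: list[str] = field(default_factory=list)  # change history, oldest first


class ModelRegistry:
    """In-memory registry keyed by (name, version). A real one would persist to a database."""

    def __init__(self) -> None:
        self._entries: dict[tuple[str, str], RegistryEntry] = {}

    def register(self, entry: RegistryEntry, note: str) -> None:
        """Add a new model version and record when and why it was added."""
        key = (entry.name, entry.version)
        if key in self._entries:
            raise ValueError(f"{entry.name} {entry.version} is already registered")
        stamp = datetime.now(timezone.utc).isoformat(timespec="seconds")
        entry.history.append(f"{stamp} registered: {note}")
        self._entries[key] = entry

    def versions(self, name: str) -> list[str]:
        """List registered versions of a model in the order they were added."""
        return [version for (model, version) in self._entries if model == name]


# Illustrative entries only.
registry = ModelRegistry()
registry.register(
    RegistryEntry("support-drafts", "1.0", "ana", "reply-v3", ["tickets-2024"]),
    note="initial pilot",
)
registry.register(
    RegistryEntry("support-drafts", "1.1", "ana", "reply-v4", ["tickets-2024"]),
    note="updated prompt after review",
)
print(registry.versions("support-drafts"))
```

Refusing duplicate versions forces every change to show up as a new entry, which is what gives the AI Council a history to review.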

And if you’re experimenting with more autonomous or agentic AI workflows, start with read-only data, noncritical tasks, and human checkpoints. Then expand once you’ve proven the controls work.

At Aloa, we help teams spin up these starter structures (an initial council, a simple registry, and clear guardrails) so AI doesn’t sprawl without oversight.

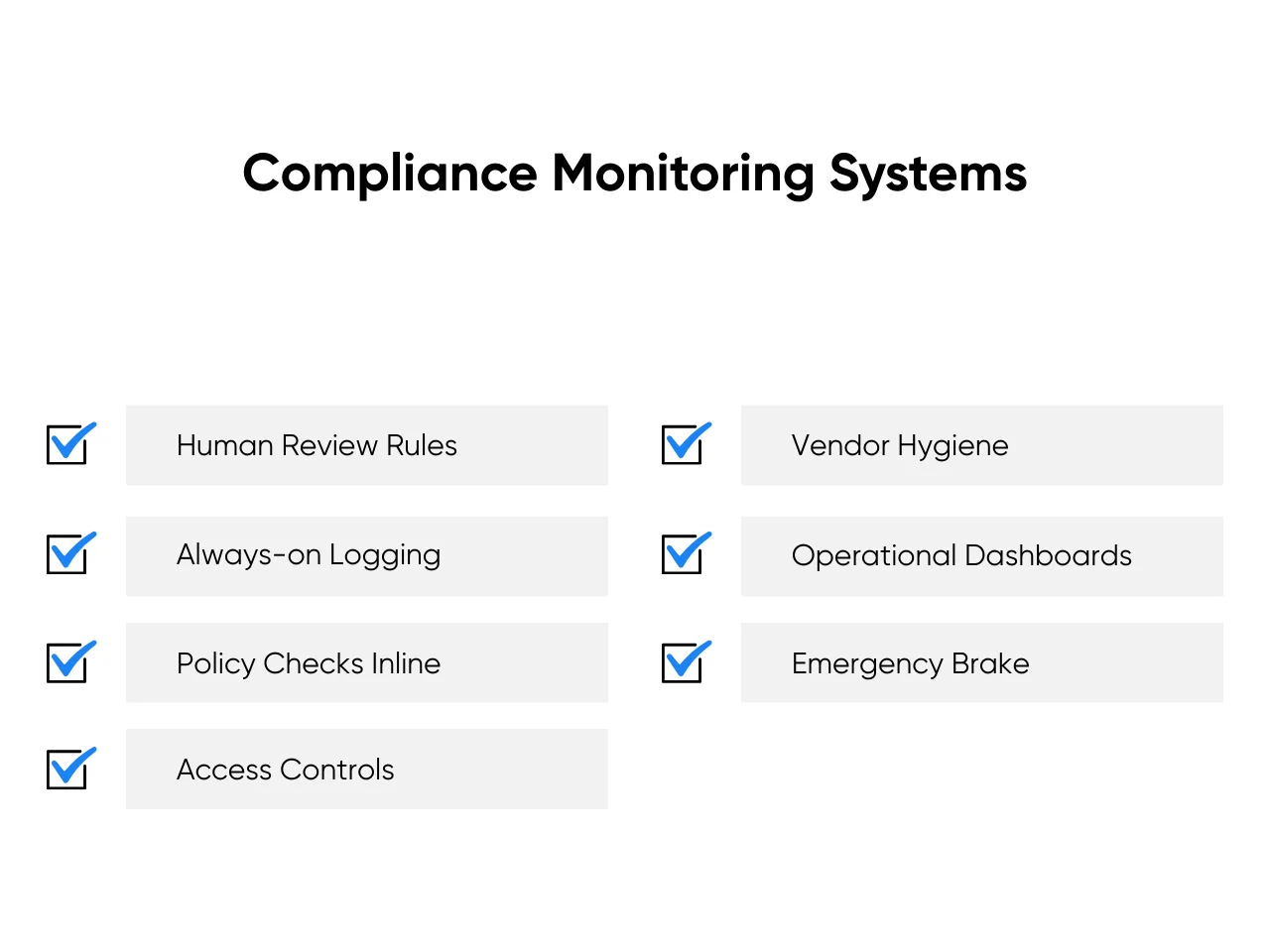

Compliance Monitoring Systems

Compliance can’t be a once-a-year audit. It needs to live in the flow of work:

- Human Review Rules: Specify which outputs must be checked before use. For example, all customer-facing content or all high-risk claims.

- Always-on Logging: Capture prompts, outputs, model versions, datasets, reviewers, and final decisions. If something goes wrong, you want the “black box recorder.”

- Policy Checks Inline: Run automated scans for PII, IP violations, and toxic content before publishing. It’s easier to block bad outputs upfront than to fix the fallout later.

- Access Controls: Use single sign-on, least-privilege permissions, and regular recertification of who gets to use what.

- Vendor Hygiene: Confirm contracts spell out data residency, deletion timelines, and security standards. Don’t assume. Verify.

- Operational Dashboards: Track edit rates, drift alerts, usage patterns, and cost per request. These metrics tell you whether the system is reliable and trusted.

- Emergency Brake: Have a “kill switch” to shut down a model or reroute to a human if it misbehaves.
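
Three of these controls (inline policy checks, always-on logging, and the emergency brake) fit naturally into a single review step. The sketch below shows one way to wire them together in Python. The regex patterns are deliberately simple stand-ins and would miss plenty of real PII, so treat them as placeholders for a dedicated scanning tool. The `KILL_SWITCH` flag and function names are hypothetical.

```python
import json
import re
from datetime import datetime, timezone

# Simplistic patterns for illustration; real PII detection needs a dedicated tool.
PII_PATTERNS = {
    "email": re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

# Hypothetical flag: set "enabled" to True to send every output to a human.
KILL_SWITCH = {"enabled": False}


def policy_findings(text: str) -> list[str]:
    """Return the names of the PII patterns found in the text."""
    return [name for name, pattern in PII_PATTERNS.items() if pattern.search(text)]


def review_output(prompt: str, output: str, model_version: str, audit_log: list[str]) -> str:
    """Decide what happens to one model output and append an audit record."""
    findings = policy_findings(output)
    if KILL_SWITCH["enabled"]:
        decision = "route_to_human"
    elif findings:
        decision = "blocked"
    else:
        decision = "approved"
    # Always log, whatever the decision, so there is a record to investigate later.
    audit_log.append(json.dumps({
        "ts": datetime.now(timezone.utc).isoformat(timespec="seconds"),
        "model_version": model_version,
        "prompt": prompt,
        "output": output,
        "findings": findings,
        "decision": decision,
    }))
    return decision


audit_log: list[str] = []
print(review_output("Draft a reply", "Your order shipped today.", "v1", audit_log))
print(review_output("Draft a reply", "Reach me at jo@example.com.", "v1", audit_log))
print(len(audit_log))
```

Because the audit record stores prompts and outputs verbatim, the log itself may contain sensitive data and should sit behind the same access controls as the source systems.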

Compliance is especially critical in healthcare, where HIPAA and other rules leave no room for error. If you’re using generative AI in healthcare, you need systems that log activity, flag risks, and enforce policies so adoption doesn’t stall. For a deeper dive, check out our guide on AI in healthcare.

Change Management and Training

Tools don’t drive adoption. People do. Change lands when work gets easier, leaders stay visible, and training is practical.

Change Management Strategies

Rolling out AI isn’t just a software update; it reshapes how work gets done. That means fear, resistance, and “this will never work here” moments are normal. The key is to get ahead of them:

- Start small, show proof: Launch AI in one or two workflows where results are easy to measure. Share quick wins like hours saved, error rates reduced, or customer satisfaction bumps.

- Make it visible: Put numbers on a simple scoreboard. “300 hours saved last quarter” lands better than a vague “we’re piloting AI.”

- Involve people early: Appoint “AI champions” inside teams. They test, give feedback, and spread confidence.

- Address the real fear: Most employees aren’t scared of the tech; they’re scared of losing relevance. Position AI as an assistant, not a replacement. Show how it clears grunt work so staff can focus on judgment calls, creativity, or patient-facing time.

- Stay transparent: Share what’s automated, what isn’t, and how success will be judged. Silence breeds rumors.

When staff see AI saving time on the tasks everyone hates, trust builds quickly.

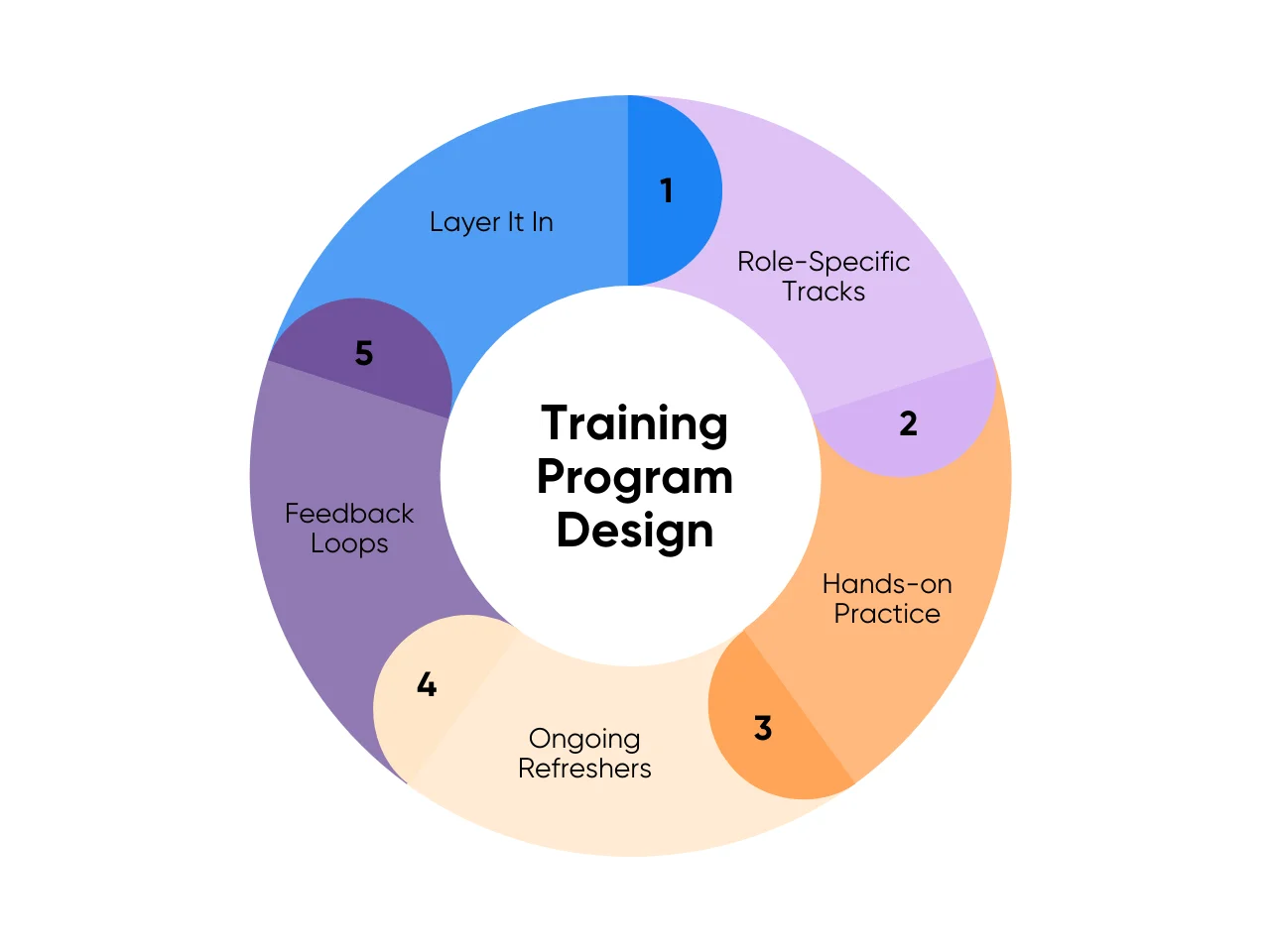

Training Program Design

Good training is more than a how-to guide. It’s about building comfort and confidence:

- Layer It In: Start with fundamentals, including how prompts work, what AI can and can’t do, and the red flags to watch for.

- Role-Specific Tracks: Design paths for different groups. A nurse needs to know how to interpret AI triage suggestions. A claims processor needs to know how to check for missing codes. A manager needs to know how to read adoption dashboards.

- Hands-on Practice: Give people real workflows to test with AI, not just slide decks. The goal is muscle memory, not theory.

- Ongoing Refreshers: AI evolves fast. Plan quarterly tune-ups to cover new features, new risks, and lessons learned from live use.

- Feedback Loops: Collect what’s working and what’s not, then adjust training. Nothing kills momentum like training that feels outdated or irrelevant.

Treat this like onboarding a new teammate. Everyone should know what the assistant is good at, where it struggles, and how to work together.

Adoption Measurement

Finally, track whether the cultural shift is real. Numbers tell one story, but behavior tells another:

- Usage: Weekly active users and session depth.

- Edit Rate: Percent of outputs needing major edits. Falling rates signal learning.

- Time to Complete: Before versus after on the same tasks. Publish the delta.

- Exception Routes: How often work is kicked back to humans and why. Fix patterns, not one-offs.

- Enablement Coverage: Who has been trained, who still needs training, and who is mentoring.

- Sentiment Pulse: Two questions monthly: “Did this help?” and “Should we keep it?”

Set simple thresholds. Green when targets are met, yellow when drift appears, red when stop rules trigger. Decisions get easier when the lights are clear.
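
As a small worked example, the edit-rate metric and the traffic-light thresholds can be expressed like this. The target and stop values, along with the weekly counts, are made up for illustration. The sketch assumes a “lower is better” metric.

```python
def edit_rate(major_edits: int, total_outputs: int) -> float:
    """Percent of outputs that needed major edits."""
    if total_outputs <= 0:
        raise ValueError("total_outputs must be positive")
    return round(100 * major_edits / total_outputs, 1)


def light(value: float, target: float, stop: float) -> str:
    """Lower is better: green at or under target, red at or over stop, yellow in between."""
    if value <= target:
        return "green"
    if value >= stop:
        return "red"
    return "yellow"


# (major edits, total outputs) per week; illustrative numbers only.
weekly_counts = [(18, 60), (15, 62), (9, 58)]
rates = [edit_rate(edits, total) for edits, total in weekly_counts]
lights = [light(rate, target=20.0, stop=35.0) for rate in rates]
falling = all(later < earlier for earlier, later in zip(rates, rates[1:]))

print(rates)
print(lights)
print("edit rate falling:", falling)
```

The same `light` function works for any metric where a lower number is better, such as time to complete or exception rate. A metric where higher is better, like weekly active users, would need the comparisons flipped.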

Measuring Success and ROI

Getting AI live is an achievement. But adoption alone doesn’t prove value. The test is visible improvements: numbers leaders trust and gains teams feel day to day.

Quantitative Success Metrics

Leaders want proof that AI is moving the needle. Some of the most reliable signals include:

- Productivity Improvements: Are teams getting more done in less time? For example, marketing teams often see faster content creation, and service teams handle higher ticket volumes with the same headcount.

- Cost Metrics: Are operating costs going down, or is time being freed up for higher-value work? Even small efficiency gains add up quickly when applied across hundreds of workflows.

- Accuracy and Quality: Are mistakes dropping compared to the old process? Cleaner outputs mean fewer rework cycles and better customer experiences.

- Financial Return: Early adopters report measurable ROI within 6–12 months of implementation. That speed makes AI unusual compared to other major tech investments, which often take years to pay back.

The key is to capture baseline data before launch. Without a “before,” your “after” won’t mean much.
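
Once a baseline exists, the financial return is simple arithmetic. The sketch below shows one common way to calculate payback period and ROI, assuming benefits come mainly from hours saved valued at a loaded hourly cost. Every figure in it is illustrative, not a benchmark, and the function names are hypothetical.

```python
from typing import Optional


def monthly_benefit(hours_saved_per_month: float, loaded_hourly_cost: float) -> float:
    """Value of the time saved each month."""
    return hours_saved_per_month * loaded_hourly_cost


def payback_months(upfront_cost: float, benefit: float, run_cost: float) -> Optional[float]:
    """Months until net monthly gains cover the upfront cost; None if they never do."""
    net = benefit - run_cost
    if net <= 0:
        return None
    return upfront_cost / net


def roi_percent(upfront_cost: float, benefit: float, run_cost: float, months: int) -> float:
    """ROI over a period: (total benefit - total cost) / total cost, as a percent."""
    total_cost = upfront_cost + run_cost * months
    total_benefit = benefit * months
    return 100 * (total_benefit - total_cost) / total_cost


# Illustrative inputs only.
benefit = monthly_benefit(hours_saved_per_month=300, loaded_hourly_cost=50)
print(benefit)
print(payback_months(upfront_cost=60_000, benefit=benefit, run_cost=3_000))
print(roi_percent(upfront_cost=60_000, benefit=benefit, run_cost=3_000, months=12))
```

Including the monthly run cost matters: a pilot that looks profitable on upfront cost alone can still fail to pay back if monitoring, training, and model updates eat the gains.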

Qualitative Success Indicators

Numbers alone don’t capture adoption health. Culture and sentiment often determine whether AI sticks. Useful checks include:

- Employee Satisfaction: Do staff feel AI is lightening their load or creating new friction? Short pulse surveys can reveal this in days, not quarters.

- Customer Experience: Are response times improving? Are communications clearer and more consistent?

- Cultural Signals: Is curiosity growing? Do teams volunteer ideas for new use cases instead of avoiding the tools?

When people feel AI is helping them, adoption spreads on its own.

ROI Tracking Systems

ROI shouldn’t be a one-off slide at the end of a pilot. It needs to be part of how you run the program. That means:

- Dashboards: Track time saved, adoption rates, and error reductions in real time. These give leaders a live view of progress.

- Pulse Surveys: Check staff sentiment regularly. They’re quick, actionable, and show whether change management is holding.

- Quarterly Reviews: Tie data back to strategic goals (efficiency, revenue growth, value creation, customer satisfaction, or innovation). This helps secure buy-in from stakeholders who may not see the day-to-day details.

That’s why we always encourage clients to treat measurement as part of the build, not an afterthought. At Aloa, we design feedback loops that capture both the hard numbers and the human signals, so you’re never left guessing whether AI is worth the investment.

TL;DR: Success isn’t just whether the model works. It’s whether it saves time, reduces mistakes, boosts satisfaction, and returns dollars. When you track both outcomes and sentiment, you build a body of evidence that makes scaling easier and keeps leadership firmly behind you.

Key Takeaways

Generative AI adoption works when it’s structured. Start small, prove value, then scale with guardrails. That’s how pilots become durable capabilities instead of one-off wins.

Here’s a simple next-step plan:

- Run a quick readiness check across infrastructure, skills, and culture.

- Pick one use case with visible impact and low risk.

- Set four metrics up front: time saved, error rates, adoption, and team sentiment.

- Add light governance and training, then review weekly and expand or refine.

We can help you make your vision a reality. At Aloa, we work with mid-market teams to scope the first pilot, design a phased rollout, and set up training and guardrails so adoption sticks. Book a consultation and we’ll design a roadmap that fits your business.

FAQs

How fast is generative AI adoption moving, and why the urgency?

Very fast. 78% of companies already use generative AI in at least one function, but 92% of mid-market firms hit challenges. The urgency comes from competitors moving fast and models improving every month, so you risk losing ground if you wait.

How do we check if we’re ready to adopt?

Look at four areas: tech (cloud, data, security), people (skills, champions, leaders), culture (trust, appetite for change), and budget (pilot, scale, ongoing ops). Most teams need time to firm up governance, training, and data access before going wide, so build that into the plan.

Should we build in-house or start with external tools?

Start with external platforms to move quickly, then add in-house capabilities as you mature. Many teams also use a multi-model approach so each task gets the right tool without vendor lock-in. Focus your internal effort on integration, governance, and training. A partner like Aloa can help scope the right balance and handle the technical heavy lifting while your team ramps up.

How much budget should we set aside?

Plan pilots in the $50,000–$500,000 range, depending on scope. Set aside 20–30% of the budget for ongoing ops, training, and optimization. Many teams see ROI in 6–12 months. For reference, see Aloa pricing for monthly and project-based options.

What should we measure to prove success?

Keep it simple: time saved, error rates, adoption, and team sentiment. Add cost impact and any function-specific metrics you care about. Baselines matter. Most reported use cases meet or beat expectations, even though many companies are still chasing enterprise-level gains.