Ever notice how fast healthcare AI went from buzzword to daily reality? Eighty percent of hospitals are already using artificial intelligence to unclog workflows and improve quality of care. And the market? It’s projected to jump from $26.6 billion in 2024 to almost $188 billion by 2030.

But the risk is just as real. Nobody wants to explain why the EHR froze halfway through morning rounds. (We’ve been in that room. It’s not fun.)

That's why at Aloa, we don’t hand over shiny demos that fall apart under pressure. We build healthcare AI around how your hospital actually works. We prototype fast, wire it clean into your IT stack, and train your team so adoption sticks.

And here’s what we’ll cover together:

- A plain breakdown of what AI applications in healthcare look like in 2025

- Real use cases you can run now (diagnostics, monitoring, admin)

- A rollout roadmap to pilot, measure, and scale without breaking workflows

- Proof points from hospitals already seeing the real benefits of AI

It’s a roadmap that’s practical, proven, and built for hospitals that don’t have time for aimless experiments.

Understanding AI's Role in Modern Healthcare

AI applications in healthcare help clinicians and staff make better decisions, faster. They spot patterns in patient data, support treatment choices, and automate routine tasks. By analyzing large sets of medical information, they can flag disease early, suggest tailored care plans, and lighten the load on care teams.

But let’s be real: AI is not some magic brain floating in the cloud. It’s a set of sharp tools that notice things tired eyes might skip after hours of labs, scans, and notes. The tools don’t make the call; you do. Think of it like having a second set of eyes that never needs a break (well, almost never).

And it’s not only clinical. AI is helping with billing, scheduling, and supply chains. All the chores that burn hours but don’t heal anyone. It clears the clutter so you can stay focused on patients. You’ll see what this looks like in practice in these real-world AI use cases in healthcare.

Core AI Technologies in Healthcare

When we talk about the role of AI in healthcare, we’re not talking about robots making rounds with stethoscopes. We’re talking about three main types of AI in healthcare already at work:

- Machine Learning: Think of it as software that spots patterns in big piles of medical data. Instead of following a fixed script, it “learns” from past cases to make predictions. In healthcare, that can mean flagging a patient at high risk of readmission based on their vitals, history, and lab results. Some hospitals even use it to predict sepsis hours before symptoms show, basically giving staff a head start instead of a scramble.

- Natural Language Processing (NLP): This is AI that can actually read doctor-speak. Clinical notes are often messy, unstructured, and full of shorthand only insiders get. NLP turns that soup into clean, coded information you can actually search, bill, or study. Ambient scribe tools (speech recognition + NLP) listen during a visit and spit out a draft note for the clinician to check. Or NLP can chew through thousands of discharge summaries to spot common complications so quality teams don’t have to.

- Computer Vision: This is AI that “sees,” often powered by deep learning. It analyzes medical images like X-rays, CT scans, or MRIs and catches details tired eyes might slide past. Imagine AI algorithms flagging a tiny lung nodule on a CT that would otherwise blend into the noise. The radiologist still makes the call, but computer vision helps them zero in faster. It’s even being used to track wound healing through photos, finally freeing nurses from the glamorous task of pulling out a ruler.

Each of these tools is already shaving minutes off charting, catching conditions earlier, and surfacing red flags that used to take hours of digging. Not robots with clipboards, just practical backup that helps humans do the work they actually signed up for.
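To make the readmission example concrete, here’s a minimal sketch of how a risk score could turn a few chart facts into a flag. Everything in it is an assumption for illustration: the feature names, the weights, the intercept, and the 0.5 threshold are made up, and a real model would learn its weights from historical cases and go through clinical validation first.

```python
import math

# Hypothetical weights for illustration only. A real model learns these
# from past cases; none of these numbers come from a validated tool.
WEIGHTS = {
    "age_over_65": 0.8,
    "prior_admissions_12mo": 0.5,  # per prior admission
    "abnormal_lab_count": 0.3,     # per out-of-range lab result
}
INTERCEPT = -3.0


def readmission_risk(features: dict) -> float:
    """Return a probability between 0 and 1 from a simple logistic model."""
    z = INTERCEPT + sum(WEIGHTS[name] * features.get(name, 0) for name in WEIGHTS)
    return 1 / (1 + math.exp(-z))


def flag_high_risk(patients: dict, threshold: float = 0.5) -> list:
    """Return the IDs of patients whose predicted risk meets the threshold."""
    return [pid for pid, feats in patients.items()
            if readmission_risk(feats) >= threshold]


patients = {
    "A": {"age_over_65": 1, "prior_admissions_12mo": 4, "abnormal_lab_count": 3},
    "B": {"age_over_65": 0, "prior_admissions_12mo": 0, "abnormal_lab_count": 1},
}
print(flag_high_risk(patients))  # ['A']
```

The flag is only a nudge. A clinician still decides what, if anything, to do about patient A.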

The AI-Human Partnership

Let’s be clear: AI isn’t here to kick doctors out of the room. It’s here to catch patterns more quickly, cut the click-fest, and reclaim time that can be better spent on human-to-human conversations.

- Radiology Support: AI can pre-screen imaging studies and flag suspicious areas. That way, a radiologist starts with a short “look here” list instead of scrolling through hundreds of slices. The final call is still theirs. AI just helps them get there faster (and with less eye strain).

- Nursing Workflows: Some hospitals use AI to monitor vitals in real time and trigger sepsis alerts hours before symptoms escalate. The nurse doesn’t just nod and move on; they check the patient, confirm, then act. It’s like having a proactive colleague who never needs coffee breaks.

- Administrative Tasks: Claim coding and prior-auth paperwork can eat entire afternoons. AI can auto-suggest codes from chart content while staff double-check outliers. That trims hours off the revenue cycle without opening the door to bad claims.

The point? AI doesn’t cancel expertise; it amplifies it. It clears the clutter so your team can focus on the calls only humans can make, and on better healthcare delivery.
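Here’s a rough sketch of the “AI suggests, staff double-check” pattern from the administrative example. It’s a toy, not a real coder: the keyword lookup stands in for a trained NLP model, the three-entry table is an illustrative sample, and the negation rule is a deliberately crude assumption for deciding which suggestions go to a human.

```python
# Toy keyword lookup standing in for a trained NLP coder (assumption).
# The codes are ICD-10-CM examples; a real system covers thousands.
KEYWORD_TO_CODE = {
    "type 2 diabetes": "E11.9",
    "hypertension": "I10",
    "pneumonia": "J18.9",
}
# Crude negation cue list, illustrative only.
NEGATION_WORDS = {"no", "denies", "without"}


def suggest_codes(note: str) -> list:
    """Return (code, needs_review) pairs for conditions mentioned in a note.

    A mention in a sentence that also contains a negation word is marked
    needs_review=True so staff look at it before anything is billed.
    """
    suggestions = []
    for sentence in note.lower().split("."):
        negated = any(word in NEGATION_WORDS for word in sentence.split())
        for phrase, code in KEYWORD_TO_CODE.items():
            if phrase in sentence:
                suggestions.append((code, negated))
    return suggestions


note = "Patient has hypertension. No evidence of pneumonia."
print(suggest_codes(note))  # [('I10', False), ('J18.9', True)]
```

The design choice worth copying is the second value in each pair: the tool never silently drops or accepts a doubtful suggestion, it routes it to a person.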

Key Implementation Considerations

AI isn’t plug-and-play in hospitals. If it were, we’d all be sipping coffee while the bots handled the charting by now. To actually work in healthcare, a few big pieces have to click into place:

- Data Quality: AI only learns from the health data you feed it. If half the vitals are missing, notes contradict each other, or fields are left blank, the system will stumble. Train a readmission model on messy charts and it’ll flag the wrong patients. That’s why cleaning the source matters just as much as picking the tool.

- Integration of AI: A tool only earns its keep if it shows up where staff already live. Extra logins and pop-ups? Forget it. Adoption tanks. Picture a sepsis alert that lives on a separate dashboard. Nobody’s checking that at 3 a.m. The safer bet is wiring outputs straight into the electronic health records (EHR) screens staff already click. That’s how you build trust and avoid alert fatigue.

- Infrastructure: Under the hood, you need strong plumbing: secure APIs, FHIR-based connections, data protection standards, role-based access, audit logs. Skip that and you’re basically running water through a leaky hose.
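A quick way to see whether the data is healthy enough is a completeness check on the fields a model would rely on. The sketch below assumes records arrive as plain dictionaries and that 90% completeness is the bar; both are assumptions, and the field names are placeholders.

```python
def field_completeness(records, fields):
    """Return the share of records with a usable value for each field."""
    total = len(records)
    report = {}
    for field in fields:
        # Treat a missing key, None, and an empty string as "not filled in".
        filled = sum(1 for r in records if r.get(field) not in (None, ""))
        report[field] = filled / total if total else 0.0
    return report


def fields_below(report, minimum=0.9):
    """List the fields whose completeness falls under the chosen bar."""
    return sorted(f for f, share in report.items() if share < minimum)


records = [
    {"systolic_bp": 120, "heart_rate": 72},
    {"systolic_bp": None, "heart_rate": 80},
    {"systolic_bp": "", "heart_rate": 95},
    {"heart_rate": 88},
]
report = field_completeness(records, ["systolic_bp", "heart_rate"])
print(report)                # {'systolic_bp': 0.25, 'heart_rate': 1.0}
print(fields_below(report))  # ['systolic_bp']
```

If a field the model depends on shows up in that second list, fix the source before tuning anything else.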

Now let's look at how AI is already sharpening diagnoses and decisions.

Clinical Applications and Diagnostic Support

So let’s walk through the wins, the watch-outs, and whether the ROI holds up when the hype wears off:

Medical Imaging and Radiology

Medical imaging AI is software trained to read scans (X-rays, CTs, MRIs) and flag patterns worth a closer look. But it doesn’t replace the radiologist; it just sharpens their eyes and speeds up the workflow.

For context:

- DeepRhythmAI: In one study, it had a false-negative rate of just 0.3%, compared to 4.4% for human techs. Translation: about 3 missed cases per 1,000 for the AI versus about 44 per 1,000 for human techs.

- Stroke Imaging in the UK: University teams trained AI that was twice as accurate as human experts at spotting strokes and timing them. That’s critical, since treatment hinges on when the stroke happened.

- Aidoc’s Stroke Solution: In real deployments, it cut “door-to-puncture” time by ~38 minutes. That’s 38 minutes of brain function saved, the difference between walking out of the hospital or living with lifelong disability.

These tools don’t take control from the doctors. They triage the data, surf through the slices, and basically whisper, “start here.” Radiologists still confirm or override. The potential payoff is huge:

- Fewer false positives = fewer unnecessary follow-ups, less stress for patients, lower healthcare costs.

- Faster reads = reduced overtime and higher throughput for radiology teams.

- Earlier, sharper disease diagnosis = treatments that are often less invasive, less expensive, and more effective.

The U.S. AI in medical imaging market is projected to hit $2.93 billion by 2030. So the question isn’t if these tools join the workflow. It’s how fast you bring them in, and whether they land as backup tools or just another dashboard nobody clicks.

Disease Detection and Prediction

Here’s where AI feels like the coworker who somehow notices everything (minus the gossip). Instead of just looking at scans, it chews through labs, vitals, and patient history, catching the “uh oh” patterns long before they’re obvious. Take these cases for example:

- Mount Sinai’s ICU AI System: This was no side project; 108,000+ hours and $5.6 million went into building it. Painful upfront, but the payoff was constant monitoring for malnutrition, deterioration, and even fall risk. Staff got early warnings before patients spiraled. And avoiding just one catastrophic ICU event? That saves both lives and millions.

- Norway’s Cancer Screening Program: AI here caught 92.7% of screen-detected cancers and flagged 40% of “interval cancers” that usually sneak past. Translation: tumors that would’ve gone unnoticed until too late were spotted through early detection, when treatment is simpler and survival odds soar.

- Sepsis Prediction Algorithms: These predictive analytics models run quietly in the background, watching like a hawk. They don’t just spit out generic alerts. They adapt to local patient populations, so the nudges get sharper over time. Health systems using them report double-digit drops in sepsis mortality. Sometimes those hours gained are the difference between a ward stay and a crash cart in the ICU.

On the floor, it feels like this: before the classic sepsis signs even show (plummeting blood pressure, labs going haywire), an alert whispers, “Hey, check bed 14.” You confirm, act early, and stop a spark from turning into a blaze. That translates to:

- Shorter ICU stays because you catch trouble sooner.

- Less invasive treatment since you’re not always playing catch-up.

- Better survival rates and fewer crushing costs for the system.

Bottom line? AI doesn’t replace the nurse’s gut instinct or the doctor’s judgment. It’s the extra set of eyes that never blinks, and sometimes it spots trouble just in time.
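To show the shape of a “check bed 14” nudge, here’s a small sketch that swaps in the published qSOFA bedside criteria (respiratory rate of 22 or more, systolic blood pressure of 100 mmHg or less, Glasgow Coma Scale below 15) as a stand-in. That’s a rule-based score, not one of the machine-learned models described above, and the bed labels, the data layout, and the choice to alert at a score of 2 are assumptions for illustration.

```python
def qsofa_score(resp_rate, systolic_bp, gcs):
    """Count how many of the three qSOFA criteria a patient meets (0 to 3)."""
    score = 0
    if resp_rate >= 22:     # breaths per minute
        score += 1
    if systolic_bp <= 100:  # mmHg
        score += 1
    if gcs < 15:            # Glasgow Coma Scale, altered mentation
        score += 1
    return score


def beds_to_check(vitals_by_bed, threshold=2):
    """Return the beds whose score meets the threshold, for a nurse to review."""
    return [bed for bed, vitals in vitals_by_bed.items()
            if qsofa_score(**vitals) >= threshold]


vitals_by_bed = {
    "bed 14": {"resp_rate": 24, "systolic_bp": 96, "gcs": 15},
    "bed 9": {"resp_rate": 16, "systolic_bp": 118, "gcs": 15},
}
print(beds_to_check(vitals_by_bed))  # ['bed 14']
```

The output is a list of beds to look at, nothing more. Confirming and acting stays with the clinician.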

Clinical Decision Support Systems

Clinical decision support (CDS) tools are basically the extra brain you wish you had on a slammed shift. They live inside your workflow, surfacing the right info at the right time: flagging risky drug combos, nudging you toward evidence-based treatments, or quietly reminding you of options you might’ve missed.

Here’s what the landscape of CDS looks like right now:

- Watson for Oncology: This one made headlines for cancer recommendations, in some cases matching tumor board decisions as much as 96% of the time. Impressive, but not perfect. Transparency gaps and odd suggestions made clinicians hesitate. Lesson learned: if a tool doesn’t feel grounded and relevant, no one trusts it (no matter how flashy the brand).

- Scope-Limited Systems: Tools like UpToDate Expert AI keep it simple: peer-reviewed guidelines, evidence only, no wild guesses. When it flags a bad drug combo or points to a care pathway, clinicians know it’s rooted in solid sources. That earns trust faster than any glossy marketing slide.

- Integration: This is the deal-breaker. A CDS tool isn’t helpful if it lives in a separate portal. The good ones use HL7 standards such as FHIR, plus APIs, to pop up suggestions right inside the EHR. With CDS Hooks, those nudges feel like part of the workflow instead of a clunky add-on.
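For a feel of what an in-EHR nudge looks like on the wire, here’s a minimal sketch of a service building a CDS Hooks style response. The `cards` list and the `summary`, `indicator`, and `source` fields follow the CDS Hooks card format; the one-entry interaction table, the function name, and the source label are hypothetical stand-ins for a curated drug knowledge base.

```python
import json

# Tiny illustrative table (assumption). A real service would query a
# maintained, clinically reviewed interaction database instead.
INTERACTIONS = {
    frozenset({"warfarin", "aspirin"}): "Increased bleeding risk",
}


def build_cards(active_meds, new_med):
    """Return a CDS Hooks style response for a newly ordered medication."""
    cards = []
    for med in active_meds:
        reason = INTERACTIONS.get(frozenset({med.lower(), new_med.lower()}))
        if reason:
            cards.append({
                "summary": f"{new_med} + {med}: {reason}",
                "indicator": "warning",  # one of: info, warning, critical
                "source": {"label": "Example interaction table (hypothetical)"},
            })
    return {"cards": cards}


response = build_cards(["Warfarin", "Metformin"], "Aspirin")
print(json.dumps(response, indent=2))
```

An empty `cards` list is a perfectly good answer. Staying quiet when there’s nothing to say is how a tool avoids becoming one more pop-up people click past.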

And when it all clicks?

- Treatment planning sharpens: Tumor genetics run through global databases, evidence-based options land in minutes instead of hours.

- Drug safety improves: Real-time checks reduce human error before scripts are signed, boosting patient safety (and sparing hospitals the fallout).

- Junior staff gain confidence: They get guided, seniors still sign off, and care stays consistent across clinical practice without losing oversight.

Of course, none of this is free. Licensing, validation, training, customization; they all come with a bill. And trust is fragile. If the tool makes too many irrelevant calls, clinicians tune it out faster than a bad EHR pop-up.

But when CDS is well-implemented, it’s the quiet safety net. Not the star of the show, but the thing that keeps adverse events from slipping through and gives providers extra confidence that every call is backed by evidence or clinical trials.

Patient Care and Monitoring Applications

This is AI up close and personal. Not humming away in a server closet, but strapped to a wrist, blinking on a bedside screen, or buzzing in an app before dinner. The goal? Keep patients safer, make care feel personal, and cut down the stress of wondering, “Is anyone paying attention?”

Remote Monitoring Solutions

Remote patient monitoring is exactly what it sounds like: keeping tabs on patients outside the hospital using connected medical devices plus a little AI horsepower. Instead of waiting weeks for the next appointment, you see what’s happening now. Care flips from “react when it’s bad” to “step in before it gets worse.”

We're talking about:

- Cardiac Wearables: Think Apple Watch with a medical degree. AI-powered ECG apps flag atrial fibrillation (and early signs that could precede a heart attack) before a patient even feels it. Setup’s simple: wear the device, data streams to the cloud, AI scans it, and clinicians get the ping. The payoff? More quick saves, fewer 2 a.m. ER runs.

- Glucose Monitoring Apps: Tools like MySugr don’t just log numbers; they spot patterns. When a spike’s coming, they nudge with a “hey, walk it off” or “adjust that next meal.” Hospitals using them see fewer diabetes-related admissions, and patients finally feel like they’re steering, not riding shotgun.

- Hospital-to-Home Monitoring: Discharge shouldn’t feel like shoving someone off a cliff. Sensors track vitals like oxygen or weight, AI filters the noise, and staff only see the red flags. Heart failure programs using this cut readmissions by double digits. Patients sleep easier, medical professionals dodge alert fatigue, and CFOs smile at the avoided costs.

With tools like these, we’re talking fewer surprises, earlier saves, and way less white-knuckle waiting for families. For hospitals, it means smoother staffing and fewer revolving-door readmissions. Not bad for a few devices and some smart math.
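“AI filters the noise, and staff only see the red flags” can be sketched in a few lines. This version uses simple rules rather than a learned model, and the thresholds (2 kg of weight gain across the window, oxygen saturation under 90%) are placeholder assumptions, not clinical guidance. A real program would set them with its care team.

```python
def red_flags(readings, weight_gain_kg=2.0, min_spo2=90):
    """Return human-readable flags for a patient's recent home readings.

    readings: list of daily dicts, oldest first, each with 'weight_kg'
    and 'spo2'. An empty list of flags means nothing is sent to staff.
    """
    flags = []
    if len(readings) >= 2:
        gain = readings[-1]["weight_kg"] - readings[0]["weight_kg"]
        if gain >= weight_gain_kg:
            flags.append(f"weight up {gain:.1f} kg over {len(readings) - 1} days")
    if readings and readings[-1]["spo2"] < min_spo2:
        flags.append(f"SpO2 {readings[-1]['spo2']}% below {min_spo2}%")
    return flags


trending_up = [
    {"weight_kg": 80.0, "spo2": 96},
    {"weight_kg": 81.0, "spo2": 95},
    {"weight_kg": 82.5, "spo2": 94},
]
stable = [
    {"weight_kg": 80.0, "spo2": 97},
    {"weight_kg": 80.2, "spo2": 96},
]
print(red_flags(trending_up))  # ['weight up 2.5 kg over 2 days']
print(red_flags(stable))       # []
```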

Personalized Treatment Planning

This is where AI really earns its scrubs. Instead of treating everyone like “the average patient,” it pulls together labs, genetics, lifestyle, and history to shape a treatment plan for one person. No more generic advice, no more one-size-fits-all. Just precision medicine in practice:

- Oncology: Tumors are sneaky; what works for one patient can flop for another. AI compares a tumor’s genetic profile against massive case datasets and highlights treatment options most likely to hit. The oncologist still makes the call, but the shortlist gets a lot smarter. The result? Fewer trial-and-error rounds, quicker hits on effective treatments, and less wasted time (and money).

- Diabetes Management: Forget the old “just eat better” script. Apps like MySugr track diet, movement, and glucose in real time, then nudge patients with advice they can actually use. That coaching keeps people engaged and cuts down on crisis-level admissions. It’s proactive care instead of constant catch-up.

- Mental Health: AI-driven CBT apps adjust on the fly. If sleep’s the problem, it pivots to insomnia strategies. If anxiety spikes, the content shifts there. That makes therapy feel tailored, not like flipping through the same workbook everyone else got.

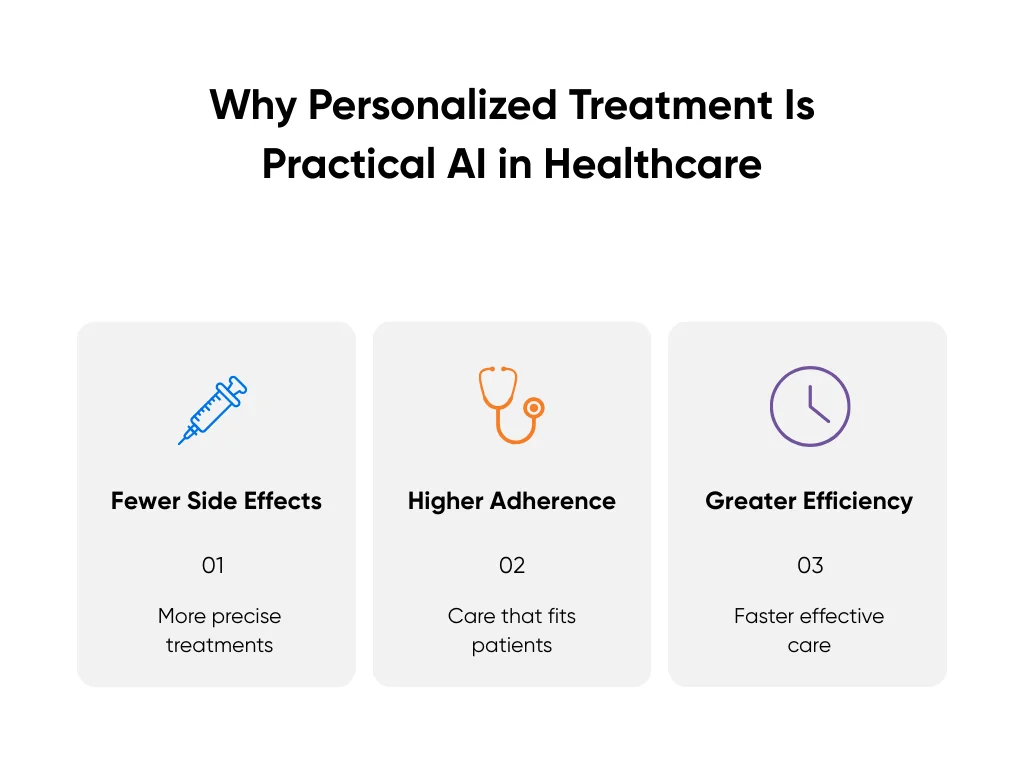

And the impact shows up fast:

- Fewer side effects because treatments are matched more precisely to patients.

- Higher adherence since patients see care plans that fit their lives.

- Greater efficiency for providers who can skip dead ends and move straight to what works.

That’s why personalized treatment is one of the most practical AI applications in healthcare. Patients get care that actually fits, providers skip the guesswork, and the system? It finally gets to breathe a little. Not bad for a bunch of algorithms in scrubs.

Patient Engagement and Communication

Here’s the sleeper hit: communication. Sometimes, patients just want to know someone’s paying attention. But healthcare professionals also want fewer unnecessary late-night calls. Here are a few concrete ways in which AI can solve both issues:

- Chatbots and Virtual Assistants: Tools like Ada Health or Babylon Health handle the routine stuff (symptom checks, FAQs, triage). Instead of a dozen midnight “is this urgent?” calls, AI filters who really needs a nurse now and who can safely wait. Clinics using them report shorter queues and calmer nights (and fewer frazzled nurses on 7 a.m. rounds).

- Medication Reminders: Missed meds are still one of the top reasons patients bounce back into the hospital. AI-powered apps ping at the right time, on the right channel (text, app, even a voice call). Some sync right into the EHR so nurses can see who’s sticking to the plan. One small nudge, fewer readmissions.

- Multilingual Support: A virtual assistant that actually speaks more than one language changes the game. A patient can get safe, clinical-grade instructions in Spanish or Mandarin in seconds instead of waiting days for a translator. Google Translate’s fine for menus, not for meds.

And when you add it up, the results are hard to ignore:

- Better Health Outcomes: Patients follow plans, flag problems early, and avoid preventable hospital trips.

- Staff Efficiency: Fewer unnecessary calls, fewer chart chases, more time for complex cases.

- Higher Satisfaction: Patients feel supported, clinicians feel less stretched. Everybody breathes easier.

Essentially, AI can act as a safety net that catches problems early and tailors care for patients in need. It can also help reduce friction for patients and clinicians alike, as seen with our HIPAA-compliant medical transcription tool.

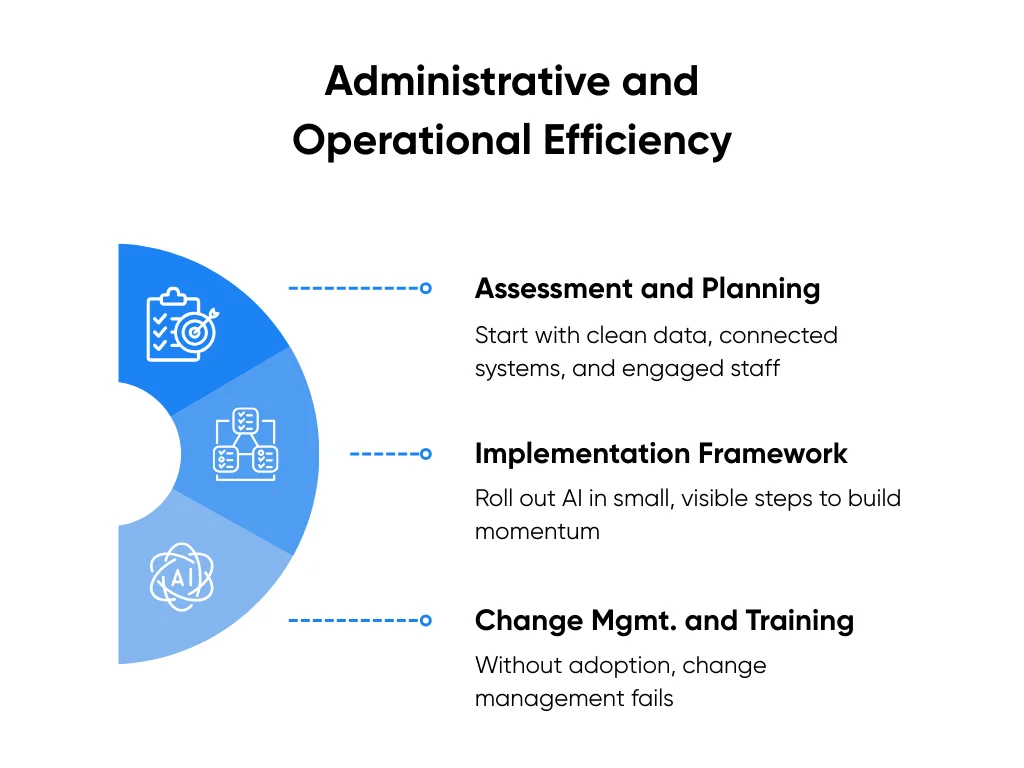

Administrative and Operational Efficiency

AI shines brightest when it takes the grunt work off your plate. Scheduling headaches, duplicate forms, billing screens that look like they haven’t been updated since dial-up; none of it’s glamorous, but it eats hours you’ll never get back.

That’s why many mid-sized healthcare organizations tap us through our healthcare AI development services. At Aloa, we sit with your team, untangle the mess, line it up with your EHR, and put guardrails in place so things don’t spin out.

So, how do you actually make AI stick without blowing up workflows?

Assessment and Planning

Before you kick off a pilot, ensure your setup can actually handle the load by running through three quick questions:

- What’s the target? Call out the one problem you want to fix. Smarter scheduling to trim no-shows? Cutting down on denied claims? Faster discharges? If you can’t say it in one line, you’re not ready yet.

- How healthy is the healthcare data? Where does it live, who owns it, and can you trust it? If half your blood pressure fields are blank, no algorithm is going to save you.

- Where does it fit? Do results show up inside the EHR or billing app your team already uses? Or in some extra tab nobody clicks? If staff have to chase it, adoption tanks.

Once you’ve got those answers, zoom out for a second. Is compliance in the loop? Budget carved out for integration (not just licenses)? Someone on deck to own the rollout day to day? These questions will quickly highlight where you need to build more guardrails.

For your first AI project, smaller is usually smarter. One focused, low-risk workflow builds trust faster than a dozen half-finished pilots. A great starter: using NLP to auto-summarize clinical notes. Harvard backs this up: people welcome AI when it cuts clicks instead of adding them.

And the fun part? Nail that first project and you won’t be the one pitching the next idea. Your team will lean in with, “Okay… what else can we hand off to AI?”

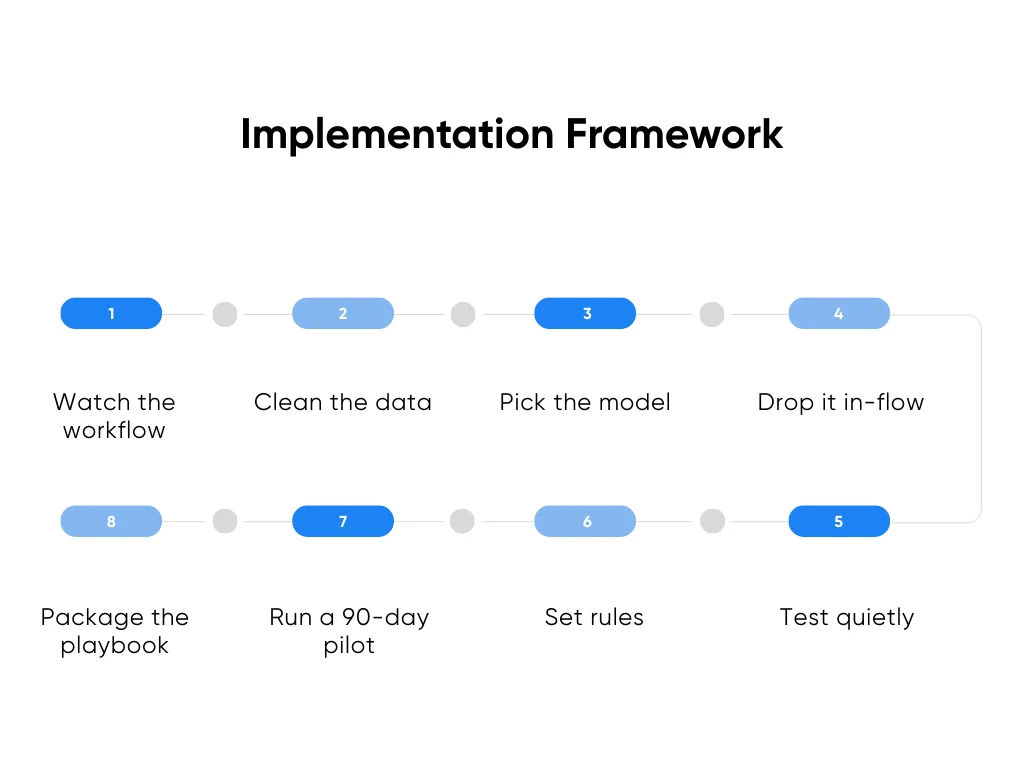

Implementation Framework

Rolling out AI works a lot better when you take it in small, visible steps. Try to flip the switch all at once and you’ll drown in edge cases. Take it bite-sized and you actually build momentum.

Here’s how to use AI in healthcare without breaking workflows:

- Watch the workflow: Sit with the people doing the job. Follow the clicks, the paper shuffling, the handoffs. That’s where you’ll spot the shortcuts that never make it into policy but matter for design. (Every hospital has them. Pretending they don’t exist is how projects tank.)

- Clean the data: Patch the obvious gaps in the clinical data fields you’ll use. If allergies, meds, or discharge codes are messy, fix them. And write down what you changed so nobody gets blindsided later.

- Pick the model: If there’s an off-the-shelf option that works, great. Use it. If your workflow is weird enough (and let’s be honest, most are), a custom build might be worth it.

- Drop it in-flow: Results should show up right inside the system staff already live in. If they need to flip to another window, adoption falls off a cliff.

- Test quietly: Start with a “silent pilot.” AI makes suggestions, humans still act. Compare side-by-side. That builds trust without risk.

- Set rules: Decide up front who owns updates, how errors are logged, and how drift gets flagged. If you don’t, it all gets ignored.

- Run a 90-day pilot: Keep it contained. One department, one metric, weekly reports. Long enough to spot real patterns, short enough to change course if it flops. And if you’d rather not reinvent the wheel, Aloa’s AI consulting services are built for exactly this kind of pilot. We help teams define the scope, pick metrics that actually matter, and keep a feedback loop tight.

- Package the playbook: Don’t leave the lessons buried in someone’s inbox. Write down install steps, rules, and training notes. That way, the next department isn’t reinventing the wheel.
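The “test quietly” step boils down to a side-by-side tally. Here’s a minimal sketch of that comparison, assuming each case in the silent pilot is logged as a pair: whether the AI flagged it, and whether the clinician independently confirmed a real issue. The logging format and the function name are assumptions.

```python
def silent_pilot_summary(cases):
    """Summarize how AI flags lined up with clinician findings.

    cases: list of (ai_flagged, clinician_confirmed) boolean pairs.
    """
    tp = sum(1 for ai, human in cases if ai and human)          # both agree: issue
    fp = sum(1 for ai, human in cases if ai and not human)      # AI-only flag
    fn = sum(1 for ai, human in cases if not ai and human)      # AI missed it
    tn = sum(1 for ai, human in cases if not ai and not human)  # both agree: fine
    total = len(cases)
    return {
        "agreement": (tp + tn) / total if total else None,
        "sensitivity": tp / (tp + fn) if (tp + fn) else None,
        "precision": tp / (tp + fp) if (tp + fp) else None,
    }


cases = ([(True, True)] * 8 + [(True, False)] * 2
         + [(False, True)] * 2 + [(False, False)] * 88)
print(silent_pilot_summary(cases))
# {'agreement': 0.96, 'sensitivity': 0.8, 'precision': 0.8}
```

High agreement alone can mislead when real issues are rare, which is why the sketch also reports how many true issues the AI caught and how many of its flags were worth the interruption.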

The thread here is simple: go small, measure fast, prove it works. Then scale. If you skip the “prove it” part, you’re just guessing. And guesses don’t fly in the healthcare sector. We even pulled together a no-fluff guide to integrating AI into medicine if you’re looking for a deeper dive.

Change Management and Training

This is the make-or-break stage. The model can be spot on, the integration airtight, but if people don’t actually use it? It’s dead on arrival. Change management isn’t a “nice-to-have” here. It is the project.

Here’s how we keep it real:

- Bring champions in early: Pull in nurses, physicians, and admins before anything goes live. Let them poke at the tool, stress-test it, and call out what doesn’t work. When staff help shape a system, they’re more likely to back it in the wild.

- Keep training light: Nobody wants a six-hour seminar. Give folks quick, role-based guides. Record a five-minute screen share. Host open office hours so people can pop in with questions. Make training something they can actually use between shifts.

- Have a safety net: Trust takes time to build but seconds to lose. If confidence dips or errors pop up, be ready to pause. Nothing builds credibility faster than showing you can roll back and fix issues before moving forward.

And don’t just measure adoption by logins or clicks. Treat it like you would patient outcomes:

- Minutes saved per task.

- Errors reduced in workflows like claims or discharge notes.

- Staff satisfaction scores moving in the right direction.
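The first two metrics are simple enough to compute from a spreadsheet export. A small sketch, assuming you’ve timed the same task before and during the pilot and counted errors over a known number of items (the function names and sample numbers are made up):

```python
from statistics import mean


def minutes_saved_per_task(baseline_minutes, pilot_minutes):
    """Average time per task before the pilot minus the average during it."""
    return mean(baseline_minutes) - mean(pilot_minutes)


def error_rate_change(baseline_errors, baseline_total, pilot_errors, pilot_total):
    """Pilot error rate minus baseline error rate (negative means fewer errors)."""
    return pilot_errors / pilot_total - baseline_errors / baseline_total


print(minutes_saved_per_task([12, 10, 14], [8, 7, 9]))  # 4
print(round(error_rate_change(10, 200, 4, 200), 3))     # -0.03
```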

One more piece: bring patients into the loop. A short note in the portal (“This summary was prepared with AI and reviewed by your clinician”) builds trust faster than a glossy announcement. Patients want honesty, not hype.

And it’s the same for staff. If AI feels like it’s working with them, not on them, adoption won’t be a battle. It’ll just happen. (And yes, that’s the dream: fewer battles, more buy-in, and maybe even fewer grumbles at the nurses’ station.)

Key Takeaways

We’ve seen enough to know this: AI doesn’t win on promises; it wins on proof. Big visions sound great on a keynote stage. But inside a hospital, the only thing that matters is whether a tool actually makes the day easier. Start with one problem that makes people groan in staff meetings: the claims that keep bouncing back, the discharge notes that drag on forever, the no-shows that never stop. Run a small pilot, keep it safe, and watch what happens. If folks start asking, “Can we do more of that?”, that’s success.

If you want to sketch out what that first step could look like, let’s chat. Not ready yet? You can still jump into our AI Builder Community or check out our Byte-Sized AI Newsletter for quick updates on AI applications in healthcare that don’t feel like homework.

FAQs About the Use of AI in Healthcare

How do healthcare AI applications actually work in practice? Are they replacing doctors and nurses?

Nope. AI isn’t lining up to steal anyone’s job. What it does well is chew through data fast and spot patterns humans might miss. An X-ray tool might flag something that looks like pneumonia, but the radiologist still makes the call. A note assistant might auto-fill the basics so nurses spend less time typing and more time with patients. Think of it as backup, not a stand-in. (And honestly, no algorithm’s volunteering for a double shift in the ICU.)

What are the main barriers stopping wider adoption?

The big one: getting new tools to play nice with old systems. EHR integration can feel like untangling a box of Christmas lights you swore you rolled up neatly last year. Then pile on HIPAA, FDA approvals, upfront costs, and staff who’ve lived through clunky rollouts before. Plus, patient data is usually scattered across three systems and a filing cabinet. That’s where teams like ours at Aloa come in to make sure AI is wired cleanly into your stack and built to hit compliance from day one. Because once people see a tool actually save them time, attitudes flip fast.

How do AI clinical decision support systems fit into EHRs?

Most connect through APIs and standards like FHIR. That lets them pull data in real time and surface alerts or scores right inside the EHR. A sepsis model, for example, can watch vitals in the background and ping the team when numbers slip. The catch? Tune it right. Too many alerts and people stop listening. (We’ve all clicked past pop-ups that felt more like spam than help.)
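Here’s a rough sketch of both halves of that answer: reading heart rate out of a FHIR Bundle of Observation resources, and only alerting when the reading stays high so the team isn’t pinged on every blip. The Bundle layout and the LOINC code 8867-4 for heart rate follow FHIR conventions; the 110 bpm limit, the three-reading rule, the helper names, and the assumption that entries arrive oldest first are all illustrative choices.

```python
HEART_RATE_LOINC = "8867-4"  # LOINC code for heart rate


def make_observation(bpm):
    """Build a minimal FHIR-style heart rate Observation (demo helper)."""
    return {"resource": {
        "resourceType": "Observation",
        "code": {"coding": [{"system": "http://loinc.org", "code": HEART_RATE_LOINC}]},
        "valueQuantity": {"value": bpm, "unit": "beats/minute"},
    }}


def heart_rates(bundle):
    """Extract heart rate values from a Bundle, in the order they appear."""
    values = []
    for entry in bundle.get("entry", []):
        obs = entry.get("resource", {})
        codes = {c.get("code") for c in obs.get("code", {}).get("coding", [])}
        if obs.get("resourceType") == "Observation" and HEART_RATE_LOINC in codes:
            values.append(obs["valueQuantity"]["value"])
    return values


def should_alert(values, limit=110, sustained=3):
    """Alert only if the last `sustained` readings are all above the limit."""
    recent = values[-sustained:]
    return len(recent) == sustained and all(v > limit for v in recent)


bundle = {"resourceType": "Bundle",
          "entry": [make_observation(bpm) for bpm in (92, 114, 118, 121)]}
print(should_alert(heart_rates(bundle)))   # True
print(should_alert([92, 121, 95, 118]))    # False: spikes, but not sustained
```

Requiring a sustained pattern is one simple tuning knob. It trades a little speed for a lot fewer false alarms, and where to set it is a clinical decision, not a coding one.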

What’s the realistic timeline for implementation?

It depends. Admin tools like scheduling assistants or chatbots? A few months. Clinical decision support? Six to twelve months, including testing and training. Complex diagnostic AI? Sometimes over a year, thanks to regulators. The smarter move is to start with a 90-day proof of concept. Quick, scoped, and tied to one metric so you can prove value without risking disruption. From there, scale into full development once the team trusts the tool and the ROI’s clear.

That’s the approach we take at Aloa (consult, validate, scale), so leaders aren’t stuck betting big on untested ideas. Nothing wins over skeptics like a working pilot that saves time right now.