Emerging AI Trends That Will Shape the Next Decade

Chris Raroque

Co-Founder

In 2025, AI progress is outpacing expectations. Scores on some demanding benchmarks rose by as much as 67 percentage points in a single year. 78% of companies reported using AI, up from 55% the year before. The question is no longer if AI works, but how it will work for you.

Yet when you’re asked to modernize quickly, legacy systems, lean budgets, and limited expertise slow you down. Vendors keep pitching bold promises, but what you need are concrete wins.

At Aloa, we take you from vision to production. We partner with you to define scope and design solutions that fit your stack and workflows. Whether you’re exploring AI automation, scaling an existing platform, or building new, we ship smoothly and keep it stable in production.

This guide unpacks five emerging trends in artificial intelligence shaping the next decade:

- Multimodal systems that merge text, images, and video

- Decision intelligence that turns data into next-best actions

- Autonomous tools tackling routine tasks

- Collaboration aids that extend people’s impact

- Governance practices that make compliance operational

Each trend is tied to real use cases and adoption steps you can pilot in 90 days, so you know exactly where to focus first.

1. The Rise of Multimodal AI Systems

Multimodal AI combines text, images, audio, and video so systems can process them together for clearer insights. Doctors can match medical notes with scans in one step to speed up diagnosis. The same approach powers apps that let customers switch between voice, chat, and visual interactions in a single conversation. This leap forward delivers context-rich results, not single-channel guesses.

That ability comes from the way these systems are built. Under the hood, multimodal models use transformer-based neural networks to align signals from text, images, and audio into one representation. Let’s break down these technical foundations to see why this matters for your business.

Technical Foundations

The magic of multimodal AI comes from models that map different signals (words, images, sounds) into the same “language” of math. That shared space lets the system link them together.

For you, that could mean a chatbot can process a support ticket with an attached photo and voice memo in one pass. It doesn’t just read the text. It sees the blinking error light and hears the customer’s frustration, then produces a clearer next step. That’s something single-mode AI can’t do.
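To make the shared “language of math” concrete, here is a minimal sketch of how a system might compare inputs once they live in the same vector space. The three-number vectors are made up for illustration; a real multimodal model would produce much longer embeddings from the raw text, image, and audio, and the function names here are assumptions rather than any vendor’s API.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: values near 1.0 mean 'similar'."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_related(query, candidates):
    """Return the name of the candidate vector closest to the query vector."""
    return max(candidates, key=lambda name: cosine_similarity(query, candidates[name]))

# Hypothetical embeddings, hand-written for this example only.
ticket_text = [0.9, 0.1, 0.3]      # "device shows a blinking red light"
ticket_photo = [0.8, 0.2, 0.4]     # photo of the blinking error light
unrelated_audio = [0.1, 0.9, 0.0]  # audio clip from a different case

candidates = {"photo": ticket_photo, "audio": unrelated_audio}
print(most_related(ticket_text, candidates))
```

Because the text and the photo point in nearly the same direction, the system can link them even though one started as words and the other as pixels.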

Vendors are already shipping enterprise-ready tools on these foundations:

- Document AI services can scan a contract, analyze the handwriting on a scanned page, and flag a missing signature in seconds.

- Meeting assistants now transcribe audio, summarize slides, and capture action items from video, all in one output.

These are real AI applications of the same transformer-based architectures powering the broader multimodal push.

Analysts put the multimodal AI market at $1.73 billion in 2024. Forecasts point to $10.89 billion by 2030, a 36.8% CAGR. That pace, plus $109 billion in private AI investment in 2024, shows companies are deploying, not just testing.

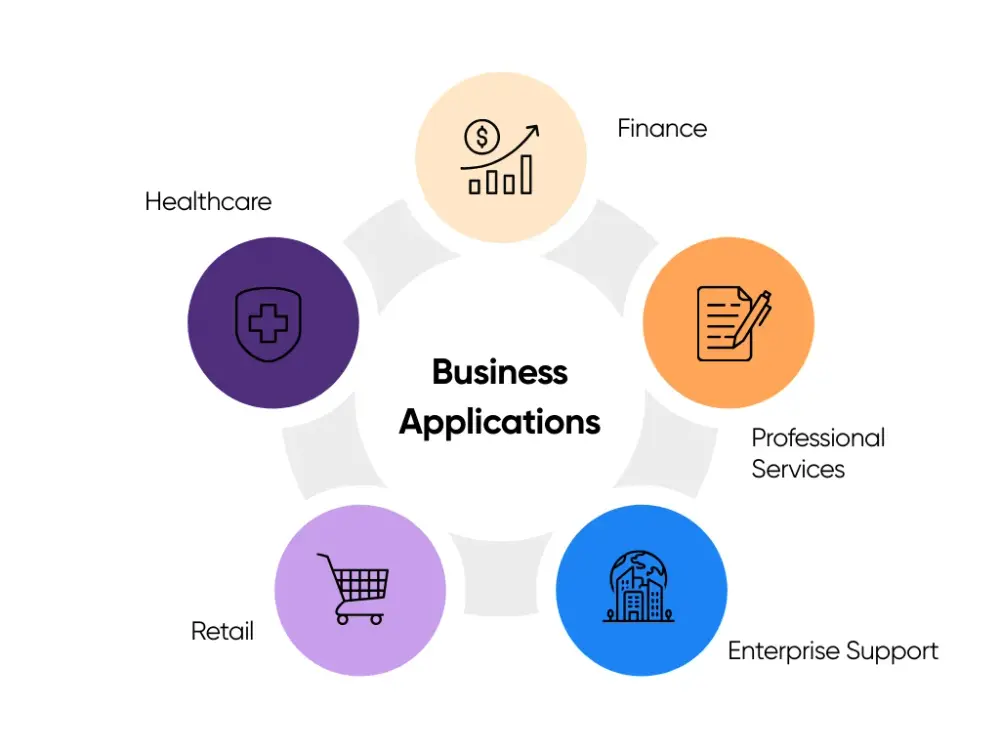

Business Applications

In the business context, multimodal AI proves its value by collapsing silos. For example:

- Healthcare: Hospitals use multimodal AI to pull together X-rays, lab results, and physician notes into one patient summary. Instead of paging through systems, a doctor gets a single view that flags risks. In pilot programs, this has cut diagnostic turnaround times and reduced missed correlations.

- Finance: Fraud teams analyze transaction logs alongside customer call transcripts. If a suspicious charge coincides with a nervous tone in a support call, the system raises the case for review. That blend of numbers and language helps reduce false negatives without overloading staff.

- Professional Services: Multimodal assistants combine client documents, whiteboard photos, and call notes to draft proposals with cited sources and flagged gaps. Work that took hours now takes minutes.

- Enterprise Support: Employees can type “calibrate Model X with the red sensor error,” and the AI responds with annotated diagrams, video clips, and a step list. That saves hours in troubleshooting and frees specialists to focus on more complex tasks.

- Retail: Amazon’s StyleSnap already uses computer vision with natural language to recommend fashion items from an uploaded photo. Mid-market retailers can apply the same principle: combine uploaded images with price filters or descriptions to personalize suggestions.

Multimodal AI’s rapid growth makes it clear we’re past trials and into business impact. Companies use it to cut bottlenecks, improve decisions, and smooth customer experiences. Mid-sized teams can get enterprise-grade intelligence without enterprise-level cost through Aloa’s hybrid model.

Implementation Strategies

The safest way to adopt multimodal AI is to start small and measure. A practical rollout path looks like this:

Select the use case: Start with a contained process such as claims review, IT help desk intake, or patient onboarding.

Define success metrics: Track first-contact resolution, average handling time, and reduction in manual effort.

Map inputs and privacy rules: Decide which data types (text, images, short clips) will flow in, and apply redaction for sensitive information.

Prototype quickly: Use low-code tools like Microsoft Copilot Studio to test basic agents.

Run a 90-day pilot: Deploy to a small group of users, gather feedback, and compare metrics to your baseline.

Harden for scale: Add retrieval-augmented search, monitoring, and access controls once results prove value.
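Comparing pilot metrics to your baseline can start as a short script. The sketch below is illustrative only: the metric names, the numbers, and the 10% improvement bar are assumptions, not figures from a real pilot.

```python
from dataclasses import dataclass

@dataclass
class Metric:
    name: str
    baseline: float
    pilot: float
    lower_is_better: bool

def improvement_pct(m: Metric) -> float:
    """Percent improvement over baseline; positive means the pilot did better."""
    change = (m.pilot - m.baseline) / m.baseline * 100
    return -change if m.lower_is_better else change

def ready_to_scale(metrics, min_improvement_pct=10.0):
    """True only if every tracked metric clears the improvement bar."""
    return all(improvement_pct(m) >= min_improvement_pct for m in metrics)

# Hypothetical pilot results for illustration.
pilot_results = [
    Metric("first_contact_resolution_rate", baseline=0.60, pilot=0.72, lower_is_better=False),
    Metric("avg_handling_time_minutes", baseline=12.0, pilot=9.0, lower_is_better=True),
]

for m in pilot_results:
    print(f"{m.name}: {improvement_pct(m):.1f}% improvement")
print("Ready to scale:", ready_to_scale(pilot_results))
```

Requiring every metric to clear the bar is a deliberately strict choice; some teams may prefer to weight metrics or accept a trade-off between them.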

Look for early signs of ROI: contact centers should see fewer escalations, field teams should close tickets faster with annotated guides, and healthcare admins should spend less time reconciling mismatched records.

Not every multimodal AI pilot will deliver an immediate return. That’s the beauty of starting small: even if ROI isn’t obvious, the pilot reveals whether the workflow is worth automating or if there is a deeper issue. In many cases, you will uncover the root problem and redirect efforts toward solving it, which can yield greater long-term benefits.

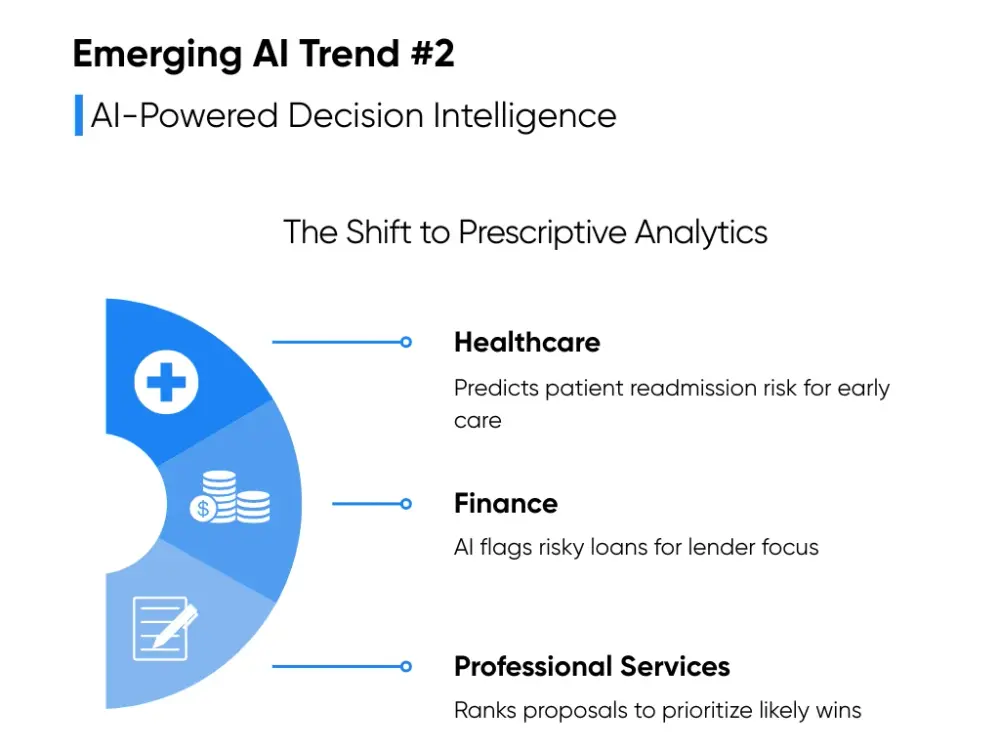

2. AI-Powered Decision Intelligence

Decision intelligence takes AI beyond reporting what happened to advising on what to do next. Instead of static dashboards that only show churn rates or revenue dips, these systems generate recommendations such as “call this account now,” “prioritize these patients,” or “adjust pricing here.”

Leaders in healthcare, finance, and professional services already use decision intelligence to make faster, smarter moves. And the promise isn’t just better analytics, but better outcomes that are traceable and defensible.

From Descriptive to Prescriptive

Until recently, AI lacked the context and reliability to move from insights to decisions. Advances in LLMs now enable recommendations that fit your business context. That’s why decision intelligence is moving from pilots to mainstream use in healthcare, finance, and professional services. The market reached $16.79 billion in 2024 and is on track for $57.75 billion by 2032, about 16.9% yearly growth.

Where traditional analytics tells you “what happened,” decision intelligence recommends, “Here’s the best next step, and here’s why.” Across industries, that shift is already reshaping daily work:

- Healthcare: Instead of catching risks only after patients are readmitted, decision intelligence combines vitals, appointment history, and social factors to flag those most likely to return. Your clinicians can focus follow-up on that list first to save time on low-risk cases and lower readmissions.

- Finance: Models pull credit scores, income proofs, bank transactions, and market signals, check for missing docs, and score each application. They rank the queue by risk so your underwriters can tackle high-risk files first and reduce defaults.

These prescriptive outputs can replace hours of combing through dashboards because they’re built on the same underlying data that businesses already use, but with more context and speed. Decision intelligence systems surface the why behind each recommendation, showing which signals (e.g., missed appointments, market shifts, client engagement) triggered the guidance. That transparency makes the recommendations traceable and reliable enough for teams to act on with confidence.
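A recommendation that carries its own “why” can be sketched in a few lines. This is a toy example under stated assumptions: the signal names and weights are hypothetical, and a production system would learn them from historical data rather than hard-code them.

```python
# Hypothetical signal weights; a real system would learn these from data.
WEIGHTS = {
    "missed_appointments": 0.5,
    "abnormal_vitals": 0.3,
    "lives_alone": 0.2,
}

def score_patient(signals):
    """Return (risk score, reasons), where reasons lists the signals that fired."""
    reasons = [name for name in WEIGHTS if signals.get(name)]
    score = sum(WEIGHTS[name] for name in reasons)
    return score, reasons

def rank_for_follow_up(patients):
    """Sort patients from highest to lowest risk, keeping the reasons attached."""
    scored = []
    for patient_id, signals in patients.items():
        score, reasons = score_patient(signals)
        scored.append({"patient": patient_id, "score": score, "reasons": reasons})
    return sorted(scored, key=lambda row: row["score"], reverse=True)

# Made-up patients for illustration.
patients = {
    "A": {"missed_appointments": True, "abnormal_vitals": False, "lives_alone": True},
    "B": {"missed_appointments": False, "abnormal_vitals": True, "lives_alone": False},
}

for row in rank_for_follow_up(patients):
    print(row["patient"], round(row["score"], 2), "because:", ", ".join(row["reasons"]))
```

Keeping the reasons next to the score is what lets a clinician or underwriter check the guidance instead of taking it on faith.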

Human Oversight in Practice

AI may suggest, but human oversight is the safeguard that keeps decision intelligence practical. In different industries, that oversight takes different forms:

- Call Centers: Entry-level agents follow AI-prompted responses, while supervisors monitor a dashboard of flagged edge cases.

- Tax Advisory: Junior staff rely on AI to prioritize filings, while senior advisors validate outliers before submission.

- Software Engineering: At Goldman Sachs, developers using AI copilots saw a 20% productivity lift, but every line of code still went through peer review.

Studies on AI decision support indicate that the impact depends on the skill level. A joint Stanford–MIT study found that less experienced workers saw the largest productivity gains when following AI guidance, while more senior staff used the time savings to focus on higher-complexity cases. In the context of decision intelligence, this means frontline staff can act more confidently on prescriptive recommendations, while leaders leverage the transparency of those recommendations to shape strategy.

Ethics and Measurement

Decision intelligence lives or dies on measurable ROI. And in the coming years, it will be a defining factor in separating successful adopters from laggards.

But too often, companies deploy tools without tracking outcomes. That leaves AI outputs as “interesting” rather than business-critical. The smarter move is to embed feedback loops:

- Compare AI recommendations against actual results.

- Retrain models regularly with new data.

- Set thresholds that trigger mandatory human review.

- Maintain audit logs of every decision path.
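The feedback loop above might look something like the following sketch. The field names, the in-memory list, and the 0.8 review threshold are assumptions for illustration; a real deployment would write to durable, append-only storage.

```python
from datetime import datetime, timezone

audit_log = []  # stand-in for durable, append-only storage

def record_decision(case_id, recommendation, confidence, review_threshold=0.8):
    """Log a recommendation and mark whether a human must review it."""
    entry = {
        "case_id": case_id,
        "recommendation": recommendation,
        "confidence": confidence,
        "needs_human_review": confidence < review_threshold,
        "logged_at": datetime.now(timezone.utc).isoformat(),
        "actual_outcome": None,  # filled in once the real result is known
    }
    audit_log.append(entry)
    return entry

def record_outcome(case_id, actual_outcome):
    """Attach the real-world result to the matching log entry."""
    for entry in audit_log:
        if entry["case_id"] == case_id:
            entry["actual_outcome"] = actual_outcome

def hit_rate():
    """Share of resolved cases where the recommendation matched the outcome."""
    resolved = [e for e in audit_log if e["actual_outcome"] is not None]
    if not resolved:
        return None
    hits = sum(e["recommendation"] == e["actual_outcome"] for e in resolved)
    return hits / len(resolved)
```

A falling hit rate is one possible signal that it is time to retrain with newer data.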

58% of data and AI leaders reported exponential efficiency gains from generative AI adoption, but only a fraction measure productivity rigorously. Without hard numbers, you can’t prove or improve ROI.

Decision intelligence works best when scoped to the right decisions, measured against real benchmarks, and paired with human oversight. Start small; prioritize high-value decisions in healthcare triage, financial risk, or client pipelines. Then measure, adjust, and scale. Treated this way, it becomes a trusted guide that helps your team act with confidence, not just observe with dashboards.

3. Autonomous AI Systems

Autonomous AI refers to software agents that can pursue a defined goal with minimal human input. In practice, they’re focused assistants that handle narrow, repeatable tasks inside your business. The reality today is controlled autonomy, not free rein.

Mid-sized firms are deploying autonomous AI where the stakes are low but the payoff is real:

- Healthcare IT: Agents manage support tickets such as resetting EHR access or guiding staff through software updates. Instead of clogging the help desk queue, routine issues resolve instantly, and clinicians wait less for technical fixes.

- Finance Operations: Internal agents pull transaction data, reconcile spreadsheets, and generate daily exception reports. Analysts then review flagged anomalies. The time saved lets staff focus on investigating genuine fraud cases.

- Professional Services: Firms use project-tracking agents that monitor task deadlines, alert teams when deliverables slip, and prepare weekly status updates. What once took hours of manual coordination now runs in the background.

- Customer Support: Some retailers test autonomous draft responses for “where is my order?” queries. Supervisors still approve outgoing messages, but first-response times have dropped significantly.

Charles Lamanna of Microsoft calls these agents “the apps of the AI era.” They’re bite-sized, purpose-built tools that slot into workflows, supporting different business functions without replacing decision makers.

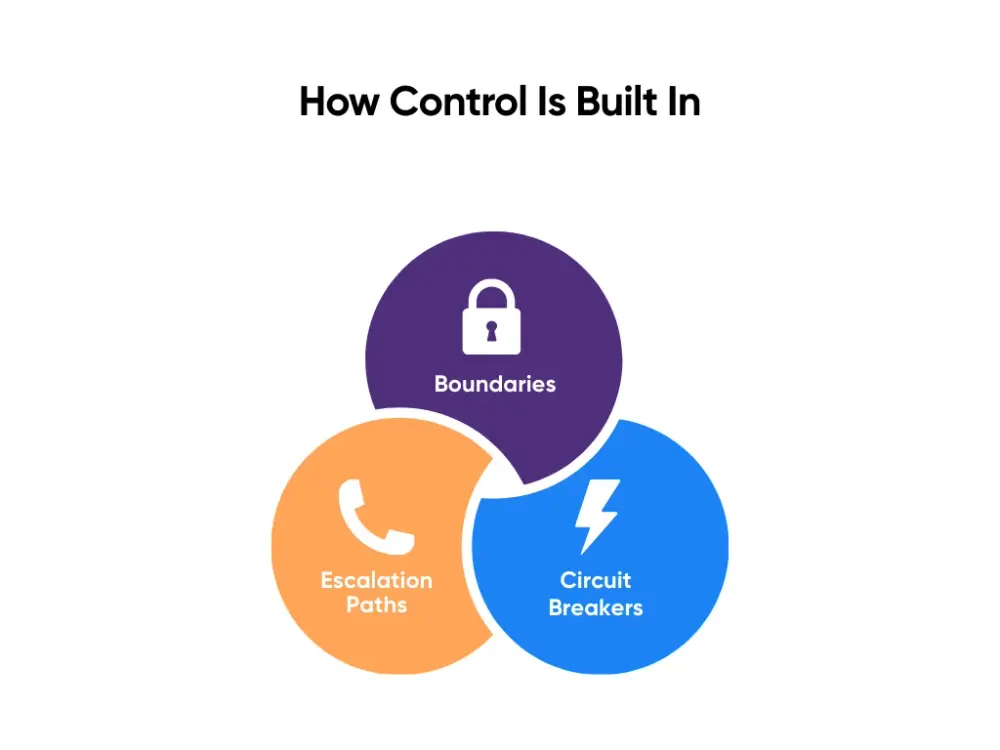

How Control Is Built In

Autonomy doesn’t mean absence of control. Smart deployments include layers of protection:

- Boundaries: Agents are limited to defined domains, like IT tickets or HR FAQs. They don’t touch financial approvals or compliance exceptions.

- Circuit Breakers: If outputs fall below a confidence threshold, the agent stops and kicks the case back to a human.

- Escalation Paths: Every system has a named reviewer who steps in when the AI is uncertain.

In healthcare, this might mean an IT agent that stops if an access request involves protected health information. In finance, it might be an alert when an agent encounters an unfamiliar transaction type. These controls make autonomy safe enough to trust.
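As a rough sketch, the three layers can be expressed as checks that run before an agent acts. Everything named here (the domains, the 0.75 confidence floor, the reviewer addresses) is a hypothetical placeholder, not a recommended configuration.

```python
from dataclasses import dataclass
from typing import Optional

ALLOWED_DOMAINS = {"it_ticket", "hr_faq"}  # boundary: what the agent may touch
CONFIDENCE_FLOOR = 0.75                    # circuit breaker threshold (assumed)
REVIEWERS = {                              # escalation path: one named reviewer per domain
    "it_ticket": "it-lead@example.com",
    "hr_faq": "hr-lead@example.com",
}
DEFAULT_REVIEWER = "ops-manager@example.com"

@dataclass
class AgentResult:
    action: str  # "auto_resolve" or "escalate"
    reason: str
    reviewer: Optional[str] = None

def handle(domain: str, confidence: float, touches_phi: bool = False) -> AgentResult:
    """Decide whether the agent may act on its own or must hand off to a person."""
    if domain not in ALLOWED_DOMAINS:
        return AgentResult("escalate", "outside allowed domains", DEFAULT_REVIEWER)
    if touches_phi:
        return AgentResult("escalate", "involves protected health information", REVIEWERS[domain])
    if confidence < CONFIDENCE_FLOOR:
        return AgentResult("escalate", "confidence below threshold", REVIEWERS[domain])
    return AgentResult("auto_resolve", "within boundaries and confident")

print(handle("it_ticket", 0.9))
print(handle("finance_approval", 0.99))
```

Note that a high confidence score never overrides a boundary: the out-of-domain request escalates even at 0.99.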

What's the Impact on ROI and Workforce?

The wins are tangible:

- Automating IT resets frees tech staff for security projects.

- Automating weekly reconciliation saves finance teams hours of late-night number-crunching.

- Automating project reminders in professional services keeps utilization rates higher with no added admin.

These gains compound. Over a quarter, reclaimed hours translate into lower operating costs and faster service. But as MIT Sloan Management Review notes, don’t expect autonomous AI to handle unsupervised money flows or compliance-critical decisions any time soon.

The best way forward is to treat autonomy as a set of assistants, not replacements. Start with narrow workflows where errors are low-risk and measurement is clear. Layer in guardrails, run 90-day pilots, and only then scale. This is how we do it at Aloa, turning autonomous AI into dependable software in your stack.

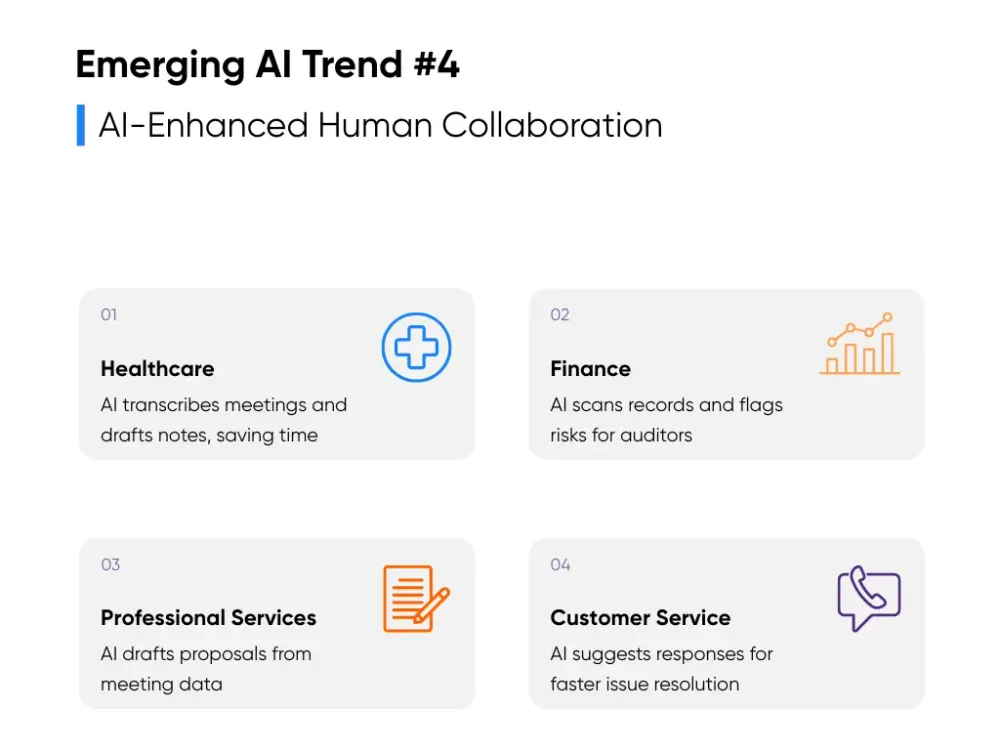

4. AI-Enhanced Human Collaboration

AI-enhanced collaboration is about amplifying what people do best, not replacing them. Instead of handing off entire jobs, these tools handle prep work, surface context, and suggest options so teams can focus on judgment, creativity, and client relationships.

And here's where collaboration shows up today:

- Healthcare: During multidisciplinary care meetings, AI assistants transcribe discussions, pull relevant labs and imaging, and generate discharge notes. Clinicians save hours on paperwork, and patients leave with clearer follow-up instructions.

- Finance: Audit teams use AI copilots to scan through thousands of compliance records, auto-fill standard sections of reports, and flag anomalies. Instead of wading through forms, auditors spend their time on higher-risk cases that need expertise.

- Professional Services: Consulting teams feed in workshop transcripts, whiteboard snapshots, email threads, and other source material. The AI drafts an outline for a client proposal, pulling out key priorities. Partners refine strategy rather than starting from a blank slide deck.

- Customer Service: Agents fielding live calls get AI-suggested responses based on past tickets and product manuals. Instead of putting a customer on hold to dig through knowledge bases, they resolve issues on the spot.

Why Adoption Depends on Culture

When it comes to humans collaborating with AI, the biggest barrier isn’t tech; it’s trust. Over 90% of leaders said cultural resistance is the main obstacle to becoming truly data- and AI-driven. Employees worry about AI accuracy as well as their own job security. Managers fear liability.

For AI to fully replace humans, jobs would have to be highly repetitive. But a project manager today might step into client strategy tomorrow. A financial analyst might shift from running reports to leading vendor negotiations. Businesses constantly reconfigure their teams around new goals, and individuals adapt their skills accordingly.

That flexibility is exactly why AI is a complement, not a substitute. With the right culture, AI collaboration tools can become the teammate everyone trusts. Here are a few practical steps that may help collaboration stick:

- Role-Specific Training: Show clinicians how AI assistants generate discharge notes but not final diagnoses, or teach auditors that copilots can pre-fill forms but not sign them off. This clarity reduces misuse and builds confidence.

- Built-In Transparency: Configure tools to show the data source behind each suggestion, display confidence scores, and always allow staff to edit or reject outputs. That way, AI becomes an aid, not an unchecked authority.

- Outcome-Based Tracking: Measure the effect on turnaround times, error rates, and client satisfaction. For example, compare proposal win rates before and after AI-assisted drafting, or measure how many customer queries are resolved on the first call.
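Built-in transparency can be as simple as never showing a suggestion without its source and confidence, and never applying one without a human decision. The sketch below assumes a hypothetical `Suggestion` record and three review choices; the knowledge-base reference is invented for the example.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Suggestion:
    text: str
    source: str        # where the suggestion came from, shown to staff
    confidence: float  # 0.0 to 1.0, shown to staff

def display(s: Suggestion) -> str:
    """Render the suggestion together with its source and confidence."""
    return f"{s.text} (source: {s.source}, confidence: {s.confidence:.0%})"

def review(s: Suggestion, decision: str, edited_text: Optional[str] = None) -> Optional[str]:
    """Staff always have the final say: accept, edit, or reject."""
    if decision == "accept":
        return s.text
    if decision == "edit":
        if edited_text is None:
            raise ValueError("an edit decision needs edited_text")
        return edited_text
    if decision == "reject":
        return None
    raise ValueError(f"unknown decision: {decision}")

suggestion = Suggestion("Restart the router", "KB-article-12", 0.82)
print(display(suggestion))
```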

5. Ethical AI and Governance

In 2024, U.S. regulators introduced nearly 60 new AI-related actions, twice as many as the year before. The EU’s AI Act entered into force in August 2024, with obligations phasing in over the following years. Countries from Canada to Singapore are setting disclosure rules. For mid-sized firms, this is the compliance environment you’ll operate in.

Ethical AI is about ensuring that gains (faster claims, cleaner audits, smarter client deliverables) don’t turn into liabilities. Start with risk-tiered use cases, layer in guardrails from the outset, and expand only when controls hold steady. Done this way, AI makes you not just competitive, but credible.

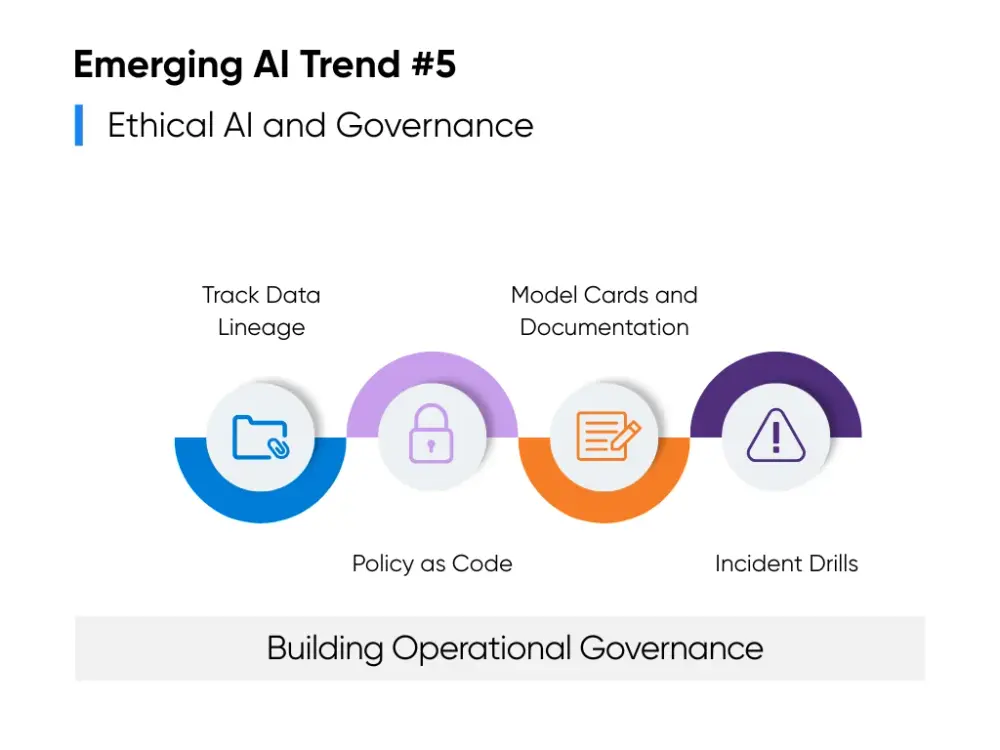

Building Operational Governance

Governance doesn’t start with glossy policies. It starts with practices you can implement today:

- Track Data Lineage: Hospitals log which data sources feed diagnostic models so they can answer questions during HIPAA audits. In finance, lineage reports help prove that anti-fraud models don’t pull from unverified sources.

- Policy as Code: Banks configure systems to block autonomous agents from sending customer communications without supervisory approval. It’s not a memo; it's a safeguard built into the software.

- Model Cards and Documentation: Professional services firms publish plain-language summaries of their AI models, which outline limits and risks. This transparency reassures both clients and regulators.

- Incident Drills: Healthcare providers run “bias drills” where teams review cases flagged as problematic. By simulating a failure, they’re ready if regulators or patients raise concerns.

These are the kinds of basics that reduce rework and build audit readiness before problems surface.
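Policy as code, in its simplest form, is a rule the software checks before it acts. Here is a minimal sketch, assuming a hypothetical policy table and action names; real deployments would typically load policies from version-controlled configuration.

```python
from typing import Optional

class PolicyViolation(Exception):
    """Raised when an action is blocked by policy."""

# Hypothetical policy table: action name -> rule.
POLICY = {
    "draft_customer_message": {"requires_approval": False},
    "send_customer_message": {"requires_approval": True},
}

def enforce(action: str, actor: str, approved_by: Optional[str] = None) -> str:
    """Allow the action only if policy permits it; unknown actions are denied."""
    rule = POLICY.get(action)
    if rule is None:
        raise PolicyViolation(f"{action} is not an allowed action")
    if rule["requires_approval"] and approved_by is None:
        raise PolicyViolation(f"{action} by {actor} needs supervisor approval")
    return f"{action} permitted for {actor}"

print(enforce("draft_customer_message", "agent-7"))
try:
    enforce("send_customer_message", "agent-7")
except PolicyViolation as err:
    print("Blocked:", err)
```

Denying anything not listed in the table is a deliberate default: a new capability stays off until someone writes a rule for it.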

Managing Risk and Trust

Strong governance is reinforced by modern risk frameworks that account for bias, data leakage, and model drift. Benchmarks like HELM Safety and AIR-Bench assess model safety and risk. Inside organizations, leaders are pairing them with safeguards such as:

- Confidence Thresholds: Customer service bots escalate to humans below 80% certainty.

- Approval Workflows: Finance teams require dual sign-off before AI-generated reports are released.

- Continuous Monitoring: Dashboards track model drift, with retraining triggered when accuracy drops.
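These three safeguards are small enough to sketch together. The 80% routing threshold comes from the example above; the window size, the accuracy floor, and the approver names are assumptions chosen for illustration.

```python
from collections import deque

def route(confidence, threshold=0.80):
    """Confidence threshold: the bot answers at or above it, otherwise a human does."""
    return "bot" if confidence >= threshold else "human"

def can_release(approvers, required=2):
    """Dual sign-off: at least `required` distinct people must approve."""
    return len(set(approvers)) >= required

class DriftMonitor:
    """Tracks rolling accuracy and flags when it falls below a floor."""

    def __init__(self, window=100, accuracy_floor=0.9):
        self.results = deque(maxlen=window)  # keeps only the most recent outcomes
        self.accuracy_floor = accuracy_floor

    def record(self, was_correct: bool):
        self.results.append(was_correct)

    def accuracy(self):
        if not self.results:
            return None
        return sum(self.results) / len(self.results)

    def needs_retraining(self):
        acc = self.accuracy()
        return acc is not None and acc < self.accuracy_floor

monitor = DriftMonitor(window=4, accuracy_floor=0.75)
for outcome in [True, True, False, False]:
    monitor.record(outcome)
print(route(0.79), can_release(["ana", "ben"]), monitor.needs_retraining())
```

Counting distinct approvers matters: the same person approving twice should not satisfy a dual sign-off.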

Public trust is equally important. While 80% of people in Indonesia and China see AI as a net positive, only about 40% of U.S. respondents say the same. That skepticism shows up in your employees and your clients. Firms that counter it build long-term value by making AI use transparent, allowing staff to override outputs, and giving customers ways to contest automated decisions.

This is also where outside expertise helps. With Aloa’s AI consulting and digital transformation services, governance is built into pilots from day one. We design escalation paths, monitoring dashboards, and audit-ready documentation so adoption builds confidence instead of risk.

Key Takeaways

The five emerging trends in artificial intelligence we’ve covered are already being deployed across industries. They’re proving their worth through faster claims review, smarter decision support, streamlined collaboration, and safer compliance practices, which points to AI’s future as a practical driver of growth.

Your next move should be to focus on stack-friendly pilots that can deliver measurable outcomes within 90 days. Start small, track clear metrics like turnaround time or client satisfaction, and build governance into every rollout. Scale only when results are consistent and controls hold firm.

At Aloa, we help teams put this into practice. From custom AI solutions, chatbots, and AI agents to AI consulting for governance, we design systems that fit your stack, deliver ROI, and stay compliant. If you’re ready to prove value and scale responsibly, schedule a consultation with Aloa.

FAQs About Emerging Trends in Artificial Intelligence

Why should business leaders pay attention to AI trends now?

AI is advancing at a pace most companies haven’t seen before. New capabilities are emerging monthly, not yearly. Early adopters are already reporting 20–30% improvements in efficiency and decision-making speed, and the AI market is projected to hit $1.8 trillion by 2030. Waiting risks falling behind.

How quickly are these AI trends being adopted by businesses?

Adoption is accelerating. Multimodal AI systems are already in use at more than a third of Fortune 500 firms, and autonomous AI pilots are running in over half of major enterprises. Decision intelligence tools have grown 400% in adoption in just two years.

What are the most practical business applications of multimodal AI right now?

Companies are using multimodal AI to resolve service tickets with photos plus text, create marketing content with text, images, and video, and inspect products using both visual and sensor data. Manufacturing firms even use it to catch equipment issues early by analyzing noise and vibration patterns.

How do we ensure AI decision-making systems remain accurate over time?

Accuracy depends on monitoring and feedback. Compare predictions against actual results, retrain with new data, and set thresholds for human review. Successful teams also use alerts when confidence drops, ensuring significant decisions always have human oversight.

Are autonomous AI systems safe for business-critical operations?

Yes, within limits and when implemented carefully. The safest agents are restricted to small, structured tasks such as IT password resets or HR queries, with high-stakes decisions left to people. Strong controls (like circuit breakers, escalation protocols, and strict boundaries) keep risk low while allowing confidence to build.

How can AI enhance rather than replace human workers?

AI handles repetitive or data-heavy work, giving people more time for strategy, relationships, and problem-solving. It can answer first-line customer inquiries while humans manage complex cases, generate first drafts for reports, or surface insights that people then interpret.

Should we build AI capabilities in-house or partner with external providers?

Most companies benefit from a hybrid approach. External partners provide specialized expertise and infrastructure, while internal teams handle customization and ongoing use. This is where Aloa adds value: delivering expert AI development services while transferring knowledge so your team builds long-term capability.

What industries are seeing the most immediate impact from these AI trends?

Healthcare has seen a surge, with over 200 AI-enabled medical devices approved in recent years. Finance is using AI for fraud detection and customer care. Manufacturing relies on AI for predictive maintenance, and retail for personalized recommendations. Even transportation has scaled AI, with autonomous ride services completing hundreds of thousands of trips weekly.

What’s the real cost-benefit of implementing these AI trends?

Costs are dropping fast while benefits rise. The cost of running GPT-3.5-level systems fell 280-fold in two years, and hardware costs are declining 30% annually. On the benefit side, companies report faster resolution times, lighter agent workloads, and measurable productivity gains. Goldman Sachs saw a 20% lift in developer output.

How are small businesses supposed to compete with enterprises in AI adoption?

AI is becoming more accessible every year. Open-weight models are closing performance gaps with closed models, and no-code platforms let non-technical staff build simple agents. Small businesses can focus on targeted use cases and tap into providers like Aloa to access enterprise-grade capabilities without enterprise-level spend.